- Research Article

- Open access

Sequential Monte Carlo Methods for Joint Detection and Tracking of Multiaspect Targets in Infrared Radar Images

EURASIP Journal on Advances in Signal Processing volume 2008, Article number: 217373 (2007)

Abstract

We present in this paper a sequential Monte Carlo methodology for joint detection and tracking of a multiaspect target in image sequences. Unlike the traditional contact/association approach found in the literature, the proposed methodology enables integrated, multiframe target detection and tracking incorporating the statistical models for target aspect, target motion, and background clutter. Two implementations of the proposed algorithm are discussed using, respectively, a resample-move (RS) particle filter and an auxiliary particle filter (APF). Our simulation results suggest that the APF configuration slightly outperforms the RS filter in scenarios of stealthy targets.

1. Introduction

This paper investigates the use of sequential Monte Carlo filters [1] for joint multiframe detection and tracking of randomly changing multiaspect targets in a sequence of heavily cluttered remote sensing images generated by an infrared airborne radar (IRAR) [2]. For simplicity, we restrict the discussion primarily to a single target scenario and indicate briefly how the proposed algorithms could be modified for multiobject tracking.

Most conventional approaches to target tracking in images [3] are based on suboptimal decoupling of the detection and tracking tasks. Given a reference target template, a two-dimensional (2D) spatial matched filter is applied to a single frame of the image sequence. The pixel locations where the output of the matched filter exceeds a prespecified threshold are then treated as initial estimates of the true position of detected targets. Those preliminary position estimates are subsequently assimilated into a multiframe tracking algorithm, usually a linearized Kalman filter, or alternatively discarded as false alarms originating from clutter.

Depending on its level of sophistication, the spatial matched filter design might or might not take into account the spatial correlation of the background clutter and random distortions of the true target aspect compared to the reference template. In any case, however, in a scenario with dim targets in heavily cluttered environments, the suboptimal association of a single-frame matched filter detector and a multiframe linearized tracking filter is bound to perform poorly [4].

As an alternative to the conventional approaches, we introduced in [5, 6] a Bayesian algorithm for joint multiframe detection and tracking of known targets, fully incorporating the statistical models for target motion and background clutter and overcoming the limitations of the usual association of single-frame correlation detectors and Kalman filter trackers in scenarios of stealthy targets. An improved version of the algorithm in [5, 6] was later introduced in [7] to enable joint detection and tracking of targets with unknown and randomly changing aspect. The algorithms in [5–7] were however limited by the need to use discrete-valued stochastic models for both target motion and target aspect changes, with the "absent target" hypothesis treated as an additional dummy aspect state. A conventional hidden Markov model (HMM) filter was used then to perform joint minimum probability of error multiframe detection and maximum a posteriori (MAP) tracking for targets that were declared present in each frame. A smoothing version of the joint multiframe HMM detector/tracker, based essentially on a 2D version of the forward-backward (Baum-Welch) algorithm, was later proposed in [4]. Furthermore, we also proposed in [4] an alternative tracker based on particle filtering [1, 8] which, contrary to the original HMM tracker in [7], assumed a continuous-valued kinematic (position and velocity) state and a discrete-valued target aspect state. However, the particle filter algorithm in [4] enabled tracking only (assuming that the target was always present in all frames) and used decoupled statistically independent models for target motion and target aspect.

To better capture target motion, we drop in this paper the previous constraint in [5–7] and, as in the later sections of [4], allow the unknown 2D position and velocity of the target to be continuous-valued random variables. The unknown target aspect is still modeled however as a discrete random variable defined on a finite set  , where each symbol is a pointer to a possibly rotated, scaled, and/or sheared version of the target's reference template. In order to integrate detection and tracking, building on our previous HMM work in [7], we extend the set  to include an additional dummy state that represents the absence of a target of interest in the scene. The evolution over time of the target's kinematic and aspect states is described then by a coupled stochastic dynamic model where the sequences of target positions, velocities, and aspects are mutually dependent.

Contrary to alternative feature-based trackers in the literature, the proposed algorithm in this paper detects and tracks the target directly from the raw sensor images, processing pixel intensities only. The clutter-free target image is modeled by a nonlinear function that maps a given target centroid position into a spatial distribution of pixels centered around the (quantized) centroid position, with shape and intensity being dependent on the current target aspect. Finally, the target is superimposed on a structured background whose spatial correlation is captured by a noncausal Gauss-Markov random field (GMrf) model [9–11]. The GMrf model parameters are adaptively estimated from the observed data using an approximate maximum likelihood (AML) algorithm [12].

Given the problem setup described in the previous paragraph, the optimal solution to the integrated detection/tracking problem requires the recursive computation at each frame  of the joint posterior distribution of the target's kinematic and aspect states conditioned on all observed frames from instant  up to instant  . Given, however, the inherent nonlinearity of the observation and (possibly) motion models, the exact computation of that posterior distribution is generally not possible. We resort then to mixed-state particle filtering [13] to represent the joint posterior by a set of weighted samples (or particles) such that, as the number of particles goes to infinity, their weighted average converges (in some statistical sense) to the desired minimum mean-square error (MMSE) estimate of the hidden states. Following a sequential importance sampling (SIS) [14] approach, the particles may be drawn recursively from the coupled prior statistical model for target motion and aspect, while their respective weights may be updated recursively using a likelihood function that takes into account the models for the target's signature and for the background clutter.

We propose two different implementations for the mixed-state particle filter detector/tracker. The first implementation, which was previously discussed in a conference paper (see [15]), is a resample-move (RS) filter [16] that uses particle resampling [17] followed by a Metropolis-Hastings move step [18] to combat both particle degeneracy and particle impoverishment (see [8]). The second implementation, which was not included in [15], is an auxiliary particle filter (APF) [19] that uses the current observed frame at instant  to preselect those particles at instant  which, when propagated through the prior dynamic model, are more likely to generate new samples with high likelihood. Both algorithms are original with respect to the previous particle filtering-based tracking algorithm that we proposed in [4], where the problem of joint detection and tracking with coupled motion and aspect models was not considered.

Related Work and Different Approaches in the Literature

Following the seminal work by Isard and Blake [20], particle filters have been extensively applied to the solution of visual tracking problems. In [21], a sequential Monte Carlo algorithm is proposed to track an object in video subject to model uncertainty. The target's aspect, although unknown, is assumed, however, to be fixed in [21], with no dynamic aspect change. On the other hand, in [22], an adaptive appearance model is used to specify a time-varying likelihood function expressed as a Gaussian mixture whose parameters are updated using the EM [23] algorithm. As in our work, the algorithm in [22] also processes image intensities directly, but, unlike our problem setup, the observation model in [22] does not incorporate any information about spatial correlation of image pixels, treating each pixel instead as an independent observation. A different Bayesian algorithm for tracking nonrigid (randomly deformable) objects in three-dimensional images using multiple conditionally independent cues is presented in [24]. Dynamic object appearance changes are captured by a mixed-state shape model [13] consisting of a discrete-valued cluster membership parameter and a continuous-valued weight parameter. A separate kinematic model is used in turn to describe the temporal evolution of the object's position and velocity. Unlike our work, the kinematic model in [24] is assumed statistically independent of the aspect model.

Rather than investigating solutions to the problem of multiaspect tracking of a single target, several recent references, for example, [25, 26], use mixture particle filters to tackle the different but related problem of detecting and tracking an unknown number of multiple objects with different but fixed appearance. The number of terms in the nonparametric mixture model, which represents the posterior of the unknowns, is adaptively changed as new objects are detected in the scene and initialized with a new associated observation model. Likewise, the mixture weights are also recursively updated from frame to frame in the image sequence.

Organization of the Paper

The paper is divided into 6 sections. Section 1 is this introduction. In Section 2, we present the coupled model for target aspect and motion and review the observation and clutter models focusing on the GMrf representation of the background and the derivation of the associated likelihood function for the observed (target + clutter) image. In Section 3, we detail the proposed detector/tracker in the RS and APF configurations. The performance of the two filters is discussed in Section 4 using simulated infrared airborne radar (IRAR) data. A preliminary discussion on multitarget tracking is found in Section 5, followed by an illustrative example with two targets. Finally, we present in Section 6 the conclusions of our work.

2. The Model

In the sequel, we present the target and clutter models that are used in this paper. We use lowercase letters to denote both random variables/vectors and realizations (samples) of random variables/vectors; the proper interpretation is implied in context. We use lowercase  to denote probability density functions (pdfs) and uppercase

to denote probability density functions (pdfs) and uppercase  to denote the probability mass functions (pmfs) of discrete random variables. The symbol

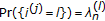

to denote the probability mass functions (pmfs) of discrete random variables. The symbol  is used to denote the probability of an event

is used to denote the probability of an event  in the

in the  -algebra of the sample space.

-algebra of the sample space.

State Variables

Let  be a nonnegative integer number and let superscript T denote the transpose of a vector or matrix. The kinematic state of the target at frame  is defined as the four-dimensional continuous (real-valued) random vector  that collects the positions,  and  , and the velocities,  and  , of the target's centroid in a system of 2D Cartesian coordinates  . On the other hand, the target's aspect state at frame  , denoted by  , is assumed to be a discrete random variable that takes values in the finite set  where the symbol " " is a dummy state that denotes that the target is absent at frame  , and each symbol  ,  , is in turn a pointer to one possibly rotated, scaled, and/or sheared version of the target's reference template.

2.1. Target Motion and Aspect Models

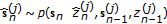

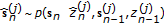

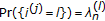

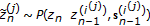

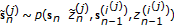

The random sequence  , is modeled as a first-order Markov process specified by the pdf of the initial kinematic state  , the transition pdf  , the transition probabilities  ,  , and the initial probabilities  ,  .

Aspect Change Model

Assume that, at any given frame, for any aspect state  , the clutter-free target image lies within a bounded rectangle of size  . In this notation,  and  denote the maximum vertical pixel distances in the target image when we move away, respectively, up and down, from the target centroid. Analogously,  and  are the maximum horizontal pixel distances in the target image when we move away, respectively, left and right, from the target centroid.

Assume also that each image frame has the size of  pixels. We introduce next the extended grid that contains all possible target centroid locations for which at least one target pixel still lies in the sensor image. Next, let  be a matrix of size  such that  for any  and

Assuming that a transition from a "present target" state to the "absent target" state can only occur when the target moves out of the image, we model the probability of a change in the target's aspect from the state  to the state  ,  , as

where the two-dimensional vector  denotes the quantized target centroid position defined on the extended image grid and obtained from the four-dimensional continuous kinematic state  by making

where  and  are the spatial resolutions of the image, respectively, in the directions  and  . The parameter  in (2) denotes in turn the probability of a new target entering the image once the previous target became absent. For simplicity, we restrict the discussion in this paper to the situation where there is at most one single target of interest present in the scene at each image frame. The specification  ,  , corresponds to assuming the worst-case scenario where, given that a new target entered the scene, there is a uniform probability that the target will take any of the  possible aspect states. Finally, the term  in (2) is the probability of a target moving out of the image at frame  given its kinematic and aspect states at frame  .
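To make the aspect change model more concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes that the quantization in (3) rounds each coordinate to the nearest pixel, that the aspect states are coded as 0 (absent) and 1 to K (templates), and that the probability of leaving the image is supplied by the caller. The names `quantize_centroid`, `sample_aspect`, `T`, `p_enter`, and `p_leave` are hypothetical.

```python
import random

def quantize_centroid(x, y, dx=1.0, dy=1.0):
    """Map a continuous centroid (x, y) to integer grid indices.

    Assumption: nearest-pixel rounding; dx, dy are the spatial resolutions.
    """
    return (int(round(x / dx)), int(round(y / dy)))

def sample_aspect(z_prev, T, p_enter, p_leave, rng=random):
    """Draw the next aspect state; 0 means 'absent', 1..K index the templates."""
    K = len(T)
    if z_prev == 0:
        # Absent target: a new target enters with probability p_enter and
        # takes any of the K aspects with uniform probability.
        return rng.randint(1, K) if rng.random() < p_enter else 0
    if rng.random() < p_leave:
        # Present -> absent occurs only when the target leaves the image.
        return 0
    # Target stays in the image: draw from row z_prev of the K x K matrix T.
    return rng.choices(range(1, K + 1), weights=T[z_prev - 1])[0]
```

Here `p_leave` stands in for the probability of the target moving out of the image given its previous kinematic and aspect states; how that probability is computed is left open in this sketch.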

Motion Model

For simplicity, we assume that, except in the situation where there is a transition from the "absent target" state to the "present target" state, the conditional pdf  is independent of the current and previous aspect states, respectively,  and  . In other words, unless  and  , we make

where  is an arbitrary pdf (not necessarily Gaussian) that models the target motion. Otherwise, if  and  , we reset the target's position and make

where  is typically a noninformative (e.g., uniform) prior pdf defined in a certain region (e.g., upper-left corner) of the image grid. Given the independence assumption in (4), it follows that, for any
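As an illustration of the two branches of the motion model, the sketch below draws a new kinematic state either from a motion pdf or from a reset prior. The paper allows an arbitrary motion pdf; the nearly-constant-velocity model with Gaussian acceleration noise used here is an assumption chosen for brevity, as are the state ordering `(x, y, vx, vy)`, the zero initial velocity after a reset, and the names `sample_kinematics`, `sigma_a`, and `region`.

```python
import random

def sample_kinematics(s_prev, z_prev, z_new, dt=1.0, sigma_a=0.1,
                      region=((0.0, 10.0), (0.0, 10.0)), rng=random):
    """Draw the next kinematic state (x, y, vx, vy).

    z_prev == 0 and z_new != 0 marks an 'absent' -> 'present' transition.
    """
    if z_prev == 0 and z_new != 0:
        # Reset branch: position drawn uniformly in an entry region
        # (assumed), velocity started at zero (assumed).
        (x0, x1), (y0, y1) = region
        return (rng.uniform(x0, x1), rng.uniform(y0, y1), 0.0, 0.0)
    # Regular branch: motion independent of the aspect states.
    x, y, vx, vy = s_prev
    ax, ay = rng.gauss(0.0, sigma_a), rng.gauss(0.0, sigma_a)
    return (x + vx * dt + 0.5 * ax * dt * dt,
            y + vy * dt + 0.5 * ay * dt * dt,
            vx + ax * dt,
            vy + ay * dt)
```

Setting `sigma_a=0.0` reduces the regular branch to deterministic constant-velocity motion, which is convenient for checking the bookkeeping.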

2.2. Observation Model and Likelihood Function

Next, we discuss the target observation model. Previous references mentioned in Section 1, for example, [21, 22, 24–26], are concerned mostly with video surveillance of near objects (e.g., pedestrian or vehicle tracking), or other similar applications (e.g., face tracking in video). For that class of applications, effects such as object occlusion are important and must be explicitly incorporated into the target observation model. In this paper by contrast, the emphasis is on a different application, namely, detection and tracking of small, quasipoint targets that are observed by remote sensors (usually mid- to high-altitude airborne platforms) and move in highly structured, generally smooth backgrounds (e.g., deserts, snow-covered fields, or other forms of terrain). Rather than modeling occlusion, our emphasis is instead on additive natural clutter.

Image Frame Model

Assuming a single target scenario, the  th frame in the image sequence is modeled as the  matrix:

where the matrix  represents the background clutter and  is a nonlinear function that maps the quantized target centroid position,  , (see (3)) into a spatial distribution of pixels centered at  and specified by a set of deterministic and known target signature coefficients dependent on the aspect state  . Specifically, we make [4]

where  is an  matrix whose entries are all equal to zero, except for the element  which is equal to 1.

For a given fixed template model  , the coefficients  in (8) are the target signature coefficients corresponding to that particular template. The signature coefficients are the product of a binary parameter  , that defines the target shape for each aspect state, and a real coefficient  , that specifies the pixel intensities of the target, again for the various states in the alphabet  . For simplicity, we assume that the pixel intensities and shapes are deterministic and known at each frame for each possible value of  . In particular, if  takes the value  denoting absence of target, then the function  in (7) reduces to the identically zero matrix, indicating that sensor observations consist of clutter only.

Remark 1. Equation (8) assumes that the target's template is entirely located within the sensor image grid. Otherwise, for targets that are close to the image borders, the summation limits in (8) must be changed accordingly to take into account portions of the target that are no longer visible.
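The clutter-free target image described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the template is represented as a dictionary from pixel offsets (relative to the centroid) to signature coefficients, `None` codes the absent target state, and pixels that fall outside the sensor grid are simply dropped, in the spirit of the remark on image borders. The function name `render_target` and the data layout are hypothetical.

```python
def render_target(L, M, centroid, template):
    """Return an L x M clutter-free target image as a list of rows.

    template: dict {(di, dj): coefficient} of offsets from the centroid,
              or None for the 'absent target' state.
    """
    frame = [[0.0] * M for _ in range(L)]
    if template is None:
        # Absent target: the target image is identically zero.
        return frame
    ci, cj = centroid
    for (di, dj), coeff in template.items():
        i, j = ci + di, cj + dj
        # Keep only the portion of the target that is still visible.
        if 0 <= i < L and 0 <= j < M:
            frame[i][j] += coeff
    return frame
```

An observed frame would then be obtained by adding a clutter matrix of the same size to the returned image, pixel by pixel.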

Clutter Model

In order to describe the spatial correlation of the background clutter, we assume that, after suitable preprocessing to remove the local means, the random field  ,  ,  , is modeled as a first-order noncausal Gauss-Markov random field (GMrf) described by the finite difference equation [9]

where  , with  if  and zero otherwise. The symbol  denotes here the expectation (or expected value) of a random variable/vector.

Likelihood Function Model

Let  ,  , and  be the one-dimensional equivalent representations, respectively, of  ,  and  in (7), obtained by row-lexicographic ordering. Let also  denote the covariance matrix associated with the random vector  , assumed to have zero mean after appropriate preprocessing. For a GMrf model as in (9), the corresponding likelihood function for a fixed aspect state  ,  , is given by [4]

where

is referred to in our work as the data term and

is called the energy term. On the other hand, for  ,  reduces to the likelihood of the absent target state, which corresponds to the probability density function of  assuming that the observation consists of clutter only, that is,

Writing the difference equation (9) in compact matrix notation, it can be shown [9–11] by the application of the principle of orthogonality that  has a block-tridiagonal structure of the form

where  denotes the Kronecker product,  is the  identity matrix, and  is a  matrix whose entries  if  and are equal to zero otherwise.

Using the block-banded structure of  in (14), it can be further shown that  may be evaluated as the output of a modified 2D spatial matched filter using the expression

where  ,  , are obtained from (3), and  is the output of a 2D differential operator

with Dirichlet (identically zero) boundary conditions.
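The two-stage computation of the data term can be illustrated with the sketch below. It assumes the usual form of a first-order noncausal GMrf operator, in which each pixel is reduced by its horizontal and vertical nearest neighbors weighted by two model parameters, and it assumes that the matched filter output is scaled by the inverse of the driving-noise variance. The names `gmrf_residual`, `data_term`, `beta_h`, `beta_v`, and `sigma2` are hypothetical, and the exact expressions should be checked against (15) and (16) in the original paper.

```python
def gmrf_residual(y, beta_h, beta_v):
    """Apply an assumed first-order 2D differential operator to frame y.

    Pixels outside the grid are treated as zero (Dirichlet boundary).
    """
    L, M = len(y), len(y[0])

    def at(i, j):
        return y[i][j] if 0 <= i < L and 0 <= j < M else 0.0

    return [[at(i, j)
             - beta_h * (at(i, j - 1) + at(i, j + 1))
             - beta_v * (at(i - 1, j) + at(i + 1, j))
             for j in range(M)] for i in range(L)]

def data_term(d, centroid, template, sigma2):
    """Correlate the signature coefficients with the operator output d."""
    L, M = len(d), len(d[0])
    ci, cj = centroid
    total = 0.0
    for (di, dj), coeff in template.items():
        i, j = ci + di, cj + dj
        if 0 <= i < L and 0 <= j < M:  # adjust limits near the borders
            total += coeff * d[i][j]
    return total / sigma2
```

The operator output depends only on the observed frame, so in this sketch it would be computed once per frame and reused for every particle and aspect state.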

Similarly, the energy term  can also be efficiently computed by exploiting the block-banded structure of  . The resulting expression is the difference between the autocorrelation of the signature coefficients  and their lag-one cross-correlations weighted by the respective GMrf model parameters  or  . Before we leave this section, we make two additional remarks.

Remark 2.

As before, (15) is valid for  and  . For centroid positions close to the image borders, the summation limits in (15) must be varied accordingly (see [6] for details).

Remark 3.

Within our framework, a crude non-Bayesian single-frame maximum likelihood target detector could be built by simply evaluating the likelihood map  for each aspect state  and finding the maximum over the image grid of the sum of likelihood maps weighted by the a priori probability for each state  (usually assumed to be identical). A target would be considered present then if the weighted likelihood peak exceeded a certain threshold. In that case, the likelihood peak would also provide an estimate for the target location. The integrated joint detector/tracker presented in Section 3 outperforms, however, the decoupled single-frame detector discussed in this remark by fully incorporating the dynamic motion and aspect models into the detection process and enabling multiframe detection within the context of a track-before-detect philosophy.
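The single-frame detector outlined in Remark 3 could be sketched as follows. The sketch assumes that the likelihood maps have already been evaluated on the image grid, one per aspect state, and are passed in as nested lists; the function name `ml_detect` and its arguments are hypothetical.

```python
def ml_detect(likelihood_maps, priors, threshold):
    """Single-frame detector: peak of the prior-weighted sum of maps.

    Returns (position, peak); position is None when the peak does not
    exceed the threshold (no target declared).
    """
    L, M = len(likelihood_maps[0]), len(likelihood_maps[0][0])
    best, best_pos = float("-inf"), None
    for i in range(L):
        for j in range(M):
            score = sum(p * lm[i][j] for p, lm in zip(priors, likelihood_maps))
            if score > best:
                best, best_pos = score, (i, j)
    return (best_pos if best > threshold else None), best
```

Because the decision uses one frame at a time, this sketch carries no memory of earlier frames, which is the limitation that the joint detector/tracker of Section 3 is meant to remove.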

3. Particle Filter Detector/Tracker

3.1. Sequential Importance Sampling

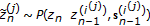

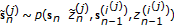

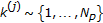

Given a sequence of observed frames  , our goal is to generate, at each instant  , a properly weighted set of samples (or particles)  ,  , with associated weights  such that, according to some statistical criterion, as  goes to infinity,

A possible mixed-state sequential importance sampling (SIS) strategy (see [4, 13]) for the recursive generation of the particles  and their proper weights is described in the algorithm below.

- (1) Initialization. For  

  - (i) Draw  , and  .

  - (ii) Make  and  .

- (2) Importance Sampling. For  

  - (i) Draw  according to (2).

  - (ii) Draw  according to (4) or (5).

  - (iii) Update the importance weights according to (18), using the likelihood function in Section 2.2.

  End-For

  - (i) Normalize the weights  such that  .

  - (ii) For  , make  ,  , and  .

  - (iii) Make  and go back to step 2.
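One iteration of the importance sampling step above can be sketched in Python as follows. The sketch assumes that the prior is used as the importance function, so that each weight is multiplied by the likelihood of the new particle, and it takes the aspect sampler, the kinematic sampler, and the likelihood as caller-supplied functions. All names (`sis_step`, `sample_aspect`, `sample_kinematics`, `likelihood`) are hypothetical placeholders for the models of Section 2.

```python
def sis_step(particles, weights, sample_aspect, sample_kinematics,
             likelihood, frame):
    """Propagate (s, z) particles one frame and update their weights.

    particles: list of (kinematic_state, aspect_state) pairs.
    weights:   normalized importance weights from the previous frame.
    """
    new_particles, new_weights = [], []
    for (s_prev, z_prev), w in zip(particles, weights):
        z = sample_aspect(s_prev, z_prev)          # aspect drawn first
        s = sample_kinematics(s_prev, z_prev, z)   # then the kinematics
        new_particles.append((s, z))
        new_weights.append(w * likelihood(frame, s, z))
    total = sum(new_weights)
    if total <= 0.0:
        raise ValueError("all particle weights are zero")
    # Normalize so that the weights sum to one.
    return new_particles, [w / total for w in new_weights]
```

The explicit check on the weight total is a practical safeguard added for this sketch; it is not part of the algorithm as stated.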

3.2. Resample-Move Filter

The sequential importance sampling algorithm in Section 3.1 is guaranteed to converge asymptotically with probability one; see [27]. However, due to the increase in the variance of the importance weights, the raw SIS algorithm suffers from the "particle degeneracy" phenomenon [8, 14, 17]; that is, after a few steps, only a small number of particles will have normalized weights close to one, whereas the majority of the particles will have negligible weight. As a result of particle degeneracy, the SIS algorithm is inefficient, requiring the use of a large number of particles to achieve adequate performance.

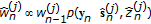

Resampling Step

A possible approach to mitigate degeneracy [17] is to resample from the existing particle population with replacement according to the particle weights. Formally, after the normalization of the importance weights $w_n^{(j)}$, we draw $i^{(j)} \in \{1, 2, \ldots, N_p\}$ with $\Pr(\{i^{(j)} = l\}) = w_n^{(l)}$, and build a new particle set $\{\hat{z}_{0:n}^{(j)}, \hat{\mathbf{s}}_{0:n}^{(j)}\}$, $j = 1, \ldots, N_p$, such that

$$\big(\hat{z}_{0:n}^{(j)}, \hat{\mathbf{s}}_{0:n}^{(j)}\big) = \big(z_{0:n}^{(i^{(j)})}, \mathbf{s}_{0:n}^{(i^{(j)})}\big).$$

After the resampling step, the new selected trajectories $\big(\hat{z}_{0:n}^{(j)}, \hat{\mathbf{s}}_{0:n}^{(j)}\big)$ are approximately distributed (see, e.g., [28]) according to the mixed posterior pdf $p(z_{0:n}, \mathbf{s}_{0:n} \mid \mathbf{y}_{0:n})$ so that we can reset all particle weights to $1/N_p$.
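The resampling step can be sketched in a few lines of Python. This is a minimal multinomial resampler under the assumption that the weights are already normalized; the function name `multinomial_resample` is hypothetical, and lower-variance schemes (systematic or residual resampling) could be swapped in.

```python
import random


def multinomial_resample(particles, weights, rng):
    """Draw len(particles) indices with replacement, index l having
    probability weights[l], then reset all weights to the uniform value."""
    n = len(particles)
    indices = rng.choices(range(n), weights=weights, k=n)
    resampled = [particles[i] for i in indices]
    return resampled, [1.0 / n] * n


rng = random.Random(1)
# A degenerate population: all the weight sits on the second particle.
resampled, new_weights = multinomial_resample(["a", "b", "c"],
                                              [0.0, 1.0, 0.0], rng)
```

In this degenerate case every resampled particle is a copy of `"b"`, which is exactly the loss of diversity that motivates the move step.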

Move Step

Although particle resampling according to the weights reduces particle degeneracy, it also introduces an undesirable side effect, namely, loss of diversity in the particle population, as the resampling process generates multiple copies of a small number of high-weight particles or, in the extreme case, of only one. A possible solution, see [16], to restore sample diversity without altering the sample statistics is to move the current particles $\{\hat{z}_n^{(j)}, \hat{\mathbf{s}}_n^{(j)}\}$ to new locations $\{z_n^{(j)}, \mathbf{s}_n^{(j)}\}$ using a Markov chain transition kernel that is invariant to the conditional mixture pdf $p\big(z_n, \mathbf{s}_n \mid \hat{z}_{0:n-1}^{(j)}, \hat{\mathbf{s}}_{0:n-1}^{(j)}, \mathbf{y}_{0:n}\big)$. Provided that the invariance condition is satisfied, the new particle trajectories remain distributed according to $p(z_{0:n}, \mathbf{s}_{0:n} \mid \mathbf{y}_{0:n})$ and the associated particle weights may be kept equal to $1/N_p$. A Markov chain that satisfies the desired invariance condition can be built using the following Metropolis-Hastings strategy [15, 18].

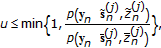

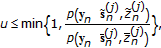

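For $j = 1, \ldots, N_p$, the following algorithm holds.

- (i) Draw $z_n'$ according to (2).
- (ii) Draw $\mathbf{s}_n'$ according to (4) or (5).
- (iii) Draw $u \sim \mathcal{U}([0, 1])$. If

  $$u \le \min\left\{1, \frac{p(\mathbf{y}_n \mid z_n', \mathbf{s}_n')}{p\big(\mathbf{y}_n \mid \hat{z}_n^{(j)}, \hat{\mathbf{s}}_n^{(j)}\big)}\right\} \qquad (19)$$

  then

  $$\big(z_n^{(j)}, \mathbf{s}_n^{(j)}\big) = (z_n', \mathbf{s}_n'). \qquad (20)$$

  Else,

  $$\big(z_n^{(j)}, \mathbf{s}_n^{(j)}\big) = \big(\hat{z}_n^{(j)}, \hat{\mathbf{s}}_n^{(j)}\big). \qquad (21)$$

- (iv) Reset $w_n^{(j)} = 1/N_p$.

End-For.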
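The Metropolis-Hastings move can be sketched as follows in Python. The sketch assumes that the candidate is proposed from the prior dynamics given the particle's previous state, in which case the acceptance probability reduces to a likelihood ratio. The function `mh_move`, the toy Gaussian likelihood, and the toy random-walk prior are hypothetical stand-ins, not the models of Section 2.

```python
import math
import random


def mh_move(current, previous, sample_prior, likelihood, observation, rng):
    """One Metropolis-Hastings move for a single resampled particle.

    The candidate is proposed from the prior given the previous state, so
    the acceptance probability is min(1, likelihood ratio)."""
    candidate = sample_prior(previous, rng)
    num = likelihood(observation, candidate)
    den = likelihood(observation, current)
    accept_prob = 1.0 if den == 0.0 else min(1.0, num / den)
    # rng.random() lies in [0, 1): probability 1 always accepts, 0 never.
    return candidate if rng.random() < accept_prob else current


# Hypothetical scalar example: random-walk prior, Gaussian likelihood.
def toy_prior(prev, rng):
    return prev + rng.gauss(0.0, 1.0)


def toy_likelihood(y, s):
    return math.exp(-0.5 * (y - s) ** 2)


rng = random.Random(2)
moved = [mh_move(4.0, 3.5, toy_prior, toy_likelihood, 5.0, rng)
         for _ in range(100)]
```

Starting from 100 identical copies of the same particle, the move step returns a population in which the accepted candidates differ from one another, which restores diversity while the weights stay uniform.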

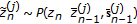

3.3. Auxiliary Particle Filter

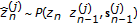

An alternative to the resample-move filter in Section 3.2 is to use the current observation $\mathbf{y}_n$ to preselect at instant $n-1$ a set of particles that, when propagated to instant $n$ according to the system dynamics, is more likely to generate samples with high likelihood. That can be done using an auxiliary particle filter (APF) [19] which samples in two steps from a mixed importance function:

$$q(i, z_n, \mathbf{s}_n \mid \mathbf{y}_{0:n}) \propto w_{n-1}^{(i)} \, p\big(\mathbf{y}_n \mid \tilde{z}_n^{(i)}, \tilde{\mathbf{s}}_n^{(i)}\big) \, p\big(z_n, \mathbf{s}_n \mid z_{n-1}^{(i)}, \mathbf{s}_{n-1}^{(i)}\big) \qquad (22)$$

where $\tilde{z}_n^{(i)}$ and $\tilde{\mathbf{s}}_n^{(i)}$ are drawn according to the mixed prior $p\big(z_n, \mathbf{s}_n \mid z_{n-1}^{(i)}, \mathbf{s}_{n-1}^{(i)}\big)$. The proposed algorithm is summarized into the following steps.

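(1) Pre-sampling Selection Step. For $j = 1, \ldots, N_p$:

- (i) Draw $\tilde{z}_n^{(j)}$ according to (2).
- (ii) Draw $\tilde{\mathbf{s}}_n^{(j)}$ according to (4) or (5).
- (iii) Compute the first-stage importance weights

  $$\lambda_n^{(j)} \propto w_{n-1}^{(j)} \, p\big(\mathbf{y}_n \mid \tilde{z}_n^{(j)}, \tilde{\mathbf{s}}_n^{(j)}\big) \qquad (23)$$

  using the likelihood function model in Section 2.2.

End-For.

(2) Importance Sampling with Auxiliary Particles. For $j = 1, \ldots, N_p$:

- (i) Sample $k^{(j)} \in \{1, \ldots, N_p\}$ with $\Pr(\{k^{(j)} = l\}) = \lambda_n^{(l)}$.
- (ii) Sample $z_n^{(j)}$ according to (2), conditioned on the selected particle $k^{(j)}$.
- (iii) Sample $\mathbf{s}_n^{(j)}$ according to (4) or (5), conditioned on the selected particle $k^{(j)}$.
- (iv) Compute the second-stage importance weights

  $$w_n^{(j)} \propto \frac{p\big(\mathbf{y}_n \mid z_n^{(j)}, \mathbf{s}_n^{(j)}\big)}{p\big(\mathbf{y}_n \mid \tilde{z}_n^{(k^{(j)})}, \tilde{\mathbf{s}}_n^{(k^{(j)})}\big)}. \qquad (24)$$

End-For.

- (v) Normalize the weights $\{w_n^{(j)}\}$ such that $\sum_{j=1}^{N_p} w_n^{(j)} = 1$.

(3) Post-sampling Selection Step. For $j = 1, \ldots, N_p$:

- (i) Draw $i^{(j)} \in \{1, \ldots, N_p\}$ with $\Pr(\{i^{(j)} = l\}) = w_n^{(l)}$.
- (ii) Make $\big(\hat{z}_n^{(j)}, \hat{\mathbf{s}}_n^{(j)}\big) = \big(z_n^{(i^{(j)})}, \mathbf{s}_n^{(i^{(j)})}\big)$ and $w_n^{(j)} = 1/N_p$.

End-For.

- (iii) Make $n = n + 1$ and go back to step 1.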
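The three APF stages can be sketched compactly in Python. The code below is a sketch in the style of [19] under stated assumptions: the pilot points are draws from the prior, the second-stage weight is the ratio of the likelihood at the new sample to the likelihood at the pilot point of its ancestor, and a final multinomial selection returns equally weighted particles. The function `apf_step` and the scalar toy model are hypothetical.

```python
import math
import random


def apf_step(particles, weights, sample_prior, likelihood, observation, rng):
    """One auxiliary particle filter recursion with prior-draw pilot points."""
    n = len(particles)
    # Stage 1: pilot draws and first-stage weights.
    pilots = [sample_prior(p, rng) for p in particles]
    pilot_lik = [likelihood(observation, q) for q in pilots]
    first = [w * l for w, l in zip(weights, pilot_lik)]
    if sum(first) <= 0.0:
        raise ValueError("all first-stage weights vanished")
    # Stage 2: preselect ancestors, propagate them, reweight by the ratio.
    ancestors = rng.choices(range(n), weights=first, k=n)
    proposed = [sample_prior(particles[k], rng) for k in ancestors]
    second = [likelihood(observation, x) / pilot_lik[k]
              for x, k in zip(proposed, ancestors)]
    total = sum(second)
    if total <= 0.0:
        raise ValueError("all second-stage weights vanished")
    second = [w / total for w in second]
    # Stage 3: post-sampling selection yields equally weighted particles.
    chosen = rng.choices(range(n), weights=second, k=n)
    return [proposed[i] for i in chosen], [1.0 / n] * n


# Hypothetical scalar toy model (random-walk prior, Gaussian likelihood).
def toy_prior(prev, rng):
    return prev + rng.gauss(0.0, 1.0)


def toy_likelihood(y, s):
    return math.exp(-0.5 * (y - s) ** 2)


rng = random.Random(3)
particles = [rng.uniform(0.0, 10.0) for _ in range(300)]
weights = [1.0 / 300] * 300
particles, weights = apf_step(particles, weights, toy_prior,
                              toy_likelihood, 5.0, rng)
```

Ancestors whose pilot likelihood is zero receive zero first-stage weight and are never selected, so the division in the second stage is well defined.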

3.4. Multiframe Detector/Tracker

The final result at instant $n$ of either the RS algorithm in Section 3.2 or the APF algorithm in Section 3.3 is a set of equally weighted samples $\{z_n^{(j)}, \mathbf{s}_n^{(j)}\}$, $j = 1, \ldots, N_p$, that are approximately distributed according to the mixed posterior $p(z_n, \mathbf{s}_n \mid \mathbf{y}_{0:n})$. Next, let $H_1$ denote the hypothesis that the target of interest is present in the scene at frame $n$. Conversely, let $H_0$ denote the hypothesis that the target is absent. Given the equally weighted set $\{z_n^{(j)}, \mathbf{s}_n^{(j)}\}$, we then compute the Monte Carlo estimate, $\hat{P}(z_n = 0 \mid \mathbf{y}_{0:n})$, of the posterior probability of target absence by dividing the number of particles for which $z_n^{(j)} = 0$ by the total number of particles $N_p$. The minimum probability of error test to decide between hypotheses $H_1$ and $H_0$ at frame $n$ is then approximated by the decision rule

$$\hat{P}(z_n = 0 \mid \mathbf{y}_{0:n}) \;\underset{H_1}{\overset{H_0}{\gtrless}}\; 1 - \hat{P}(z_n = 0 \mid \mathbf{y}_{0:n})$$

or, equivalently,

$$\hat{P}(z_n = 0 \mid \mathbf{y}_{0:n}) \;\underset{H_1}{\overset{H_0}{\gtrless}}\; \frac{1}{2}.$$

Finally, if $H_1$ is accepted, the estimate $\hat{\mathbf{s}}_{n \mid n}$ of the target's kinematic state at instant $n$ is obtained from the Monte Carlo approximation of $E[\mathbf{s}_n \mid \mathbf{y}_{0:n}, z_n \neq 0]$, which is computed by averaging the particles $\mathbf{s}_n^{(j)}$ such that $z_n^{(j)} \neq 0$.
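The detection and estimation rule described above can be sketched in Python as follows. The sketch assumes equally weighted particles stored as pairs whose first entry is the discrete state, with the value 0 encoding "target absent" (an assumed convention for this example), and whose second entry is a tuple holding the kinematic state. The function name `detect_and_estimate` is hypothetical, and ties at exactly one half are resolved in favor of "present" as an arbitrary choice.

```python
def detect_and_estimate(particles):
    """Return (target_present, estimated absence probability, state estimate).

    particles: list of (z, s) pairs with equal weights; z == 0 means absent
    and s is a tuple of floats (the kinematic state)."""
    n = len(particles)
    # Fraction of particles that vote for "target absent".
    p_absent = sum(1 for z, _ in particles if z == 0) / n
    if p_absent > 0.5:
        return False, p_absent, None
    # Average only the particles that carry a present target.
    present = [s for z, s in particles if z != 0]
    dim = len(present[0])
    estimate = tuple(sum(s[d] for s in present) / len(present)
                     for d in range(dim))
    return True, p_absent, estimate


found, p0, s_hat = detect_and_estimate(
    [(0, (0.0, 0.0)), (1, (2.0, 4.0)), (2, (4.0, 8.0))])
```

In this three-particle example one particle votes for absence, so the target is declared present and the estimate is the mean of the two remaining kinematic states.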

4. Simulation Results

In this section, we quantify the performance of the proposed sequential Monte Carlo detector/tracker, both in the RS and APF configurations, using simulated infrared airborne radar (IRAR) data. The background clutter is simulated from real IRAR images from the MIT Lincoln Laboratory database, available at the CIS website, at Johns Hopkins University. An artificial target template representing a military vehicle is added to the simulated image sequence. The simulated target's centroid moves in the image from frame to frame according to the simple white-noise acceleration model in [3, 4] with parameters  and  second. A total of four rotated, scaled, or sheared versions of the reference template is used in the simulation.
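For readers unfamiliar with white-noise acceleration motion models, the Python sketch below simulates one coordinate of a centroid trajectory. It assumes the discretized continuous-time variant, with transition matrix [[1, T], [0, 1]] and process noise covariance q [[T^3/3, T^2/2], [T^2/2, T]]; whether the simulations use this variant or the piecewise-constant one is not stated here. The function name `wna_step` and the values of `T` and `q` are illustrative assumptions, not the parameters used in the paper.

```python
import math
import random


def wna_step(pos, vel, T, q, rng):
    """Advance one coordinate (position, velocity) by one frame interval T.

    The correlated process noise is generated from the Cholesky factor of
    q * [[T**3 / 3, T**2 / 2], [T**2 / 2, T]] (requires q > 0 and T > 0)."""
    a = math.sqrt(q * T ** 3 / 3.0)       # std of the position noise
    b = (q * T ** 2 / 2.0) / a            # position/velocity coupling
    c = math.sqrt(q * T - b * b)          # remaining velocity noise std
    e1, e2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return pos + T * vel + a * e1, vel + b * e1 + c * e2


rng = random.Random(4)
T, q = 1.0, 0.05                          # assumed illustrative values
pos, vel = 60.0, 2.0
track = [(pos, vel)]
for _ in range(20):
    pos, vel = wna_step(pos, vel, T, q, rng)
    track.append((pos, vel))
```

Running the same recursion independently in the two image coordinates gives a four-dimensional kinematic state made of two positions and two velocities.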

The target's aspect changes from frame to frame following a known discrete-valued hidden Markov chain model where the probability of a transition to an adjacent aspect state is equal to 40%. In the notation of Section 2.1, that specification corresponds to setting  ,  ,  if  , and  otherwise. All four templates are equally likely at frame zero, that is,  for  . The initial $x$ and $y$ positions of the target's centroid at instant zero are assumed to be uniformly distributed, respectively, between pixels 50 and 70 in the $x$ coordinate and pixels 10 and 20 in the $y$ coordinate. The initial velocities  and  are in turn Gaussian-distributed with identical means (  or  pixels/frame) and a small standard deviation (  ).

Finally, the background clutter for the moving target sequence was simulated by adding a sequence of synthetic GMRF samples to a matrix of previously stored local means extracted from the database imagery. The GMRF samples were synthesized using correlation and prediction error variance parameters estimated from real data using the algorithms developed in [11, 12]; see [4] for a detailed pseudocode.

Two video demonstrations of the operation of the proposed detector/tracker are available for visualization by clicking on the links in [29]. The first video (peak target-to-clutter ratio, or PTCR ≈ 10 dB) illustrates the performance over 50 frames of an 8 000-particle RS detector/tracker implemented as in Section 3.2, whereas the second video (PTCR ≈ 6.5 dB) demonstrates the operation over 60 frames of a 5 000-particle APF detector/tracker implemented as in Section 3.3. Both video sequences show a target of interest that is tracked inside the image grid until it disappears from the scene; the algorithm then detects that the target is absent and correctly indicates that no target is present. Next, once a new target enters the scene, that target is acquired and tracked accurately until, in the case of the APF demonstration, it leaves the scene and no target detection is once again correctly indicated.

Both video demos show the ability of the proposed algorithms to (1) detect and track a present target both inside the image grid and near its borders, (2) detect when a target leaves the image and indicate that there is no target present until a new target appears and (3), when a new target enters the scene, correctly detect that the target is present and track it accurately. In the sequel, for illustrative purposes only, we show in the paper the detection/tracking results for a few selected frames using the RS algorithm and a dataset that is different from the one shown in the video demos.

Figure 1(a) shows the initial frame of the sequence with the target centered in the (quantized) coordinates  and superimposed on clutter. The clutter-free target template, centered at the same pixel location, is shown as a binary image in Figure 1(b). The simulated PTCR in Figure 1(b) is 10.6 dB.

Next, Figure 2(a) shows the tenth frame in the image sequence. Once again, we show in Figure 2(b) the corresponding clutter-free target image as a binary image. Note that the target from frame 1 has now undergone a random change in aspect in addition to translational motion.

The tracking results corresponding to frames 1 and 10 are shown, respectively, in Figures 3(a) and 3(b). The actual target positions are indicated by a cross sign ('+'), while the estimated positions are indicated by a circle ('o'). Note that the axes in Figures 1(a) and 1(b) and Figures 2(a) and 2(b) represent integer pixel locations, while the axes in Figures 3(a) and 3(b) represent real-valued $x$ and $y$ coordinates assuming spatial resolutions of  meters/pixel such that the  pixel range in the axes of Figures 1(a) and 1(b) and Figures 2(a) and 2(b) corresponds to a  meter range in the axes of Figures 3(a) and 3(b).

In this particular example, the target leaves the scene at frame 31 and no target reappears until frame 37. The SMC tracker accurately detects the instant when the target disappears and shows no false alarms over the 6 absent target frames as illustrated in Figures 4(a) and 4(b) where we show, respectively, the clutter+background-only thirty-sixth frame and the corresponding tracking results indicating in this case that no target has been detected. Finally, when a new target reappears, it is accurately acquired by the SMC algorithm. The final simulated frame with the new target at position  is shown for illustration purposes in Figure 5(a). Figure 5(b) shows the corresponding tracking results for the same frame.

In order to obtain a quantitative assessment of tracking performance, we ran 100 independent Monte Carlo simulations using, respectively, the 5000-particle APF detector/tracker and the 8000-particle RS detector/tracker. Both algorithms correctly detected the presence of the target over a sequence of 20 simulated frames in all 100 Monte Carlo runs. However, with PTCR = 6.5 dB, the 5000-particle APF tracker diverged (i.e., failed to estimate the correct target trajectory) in 3 out of the 100 Monte Carlo trials, whereas the RS tracker diverged in 5 out of 100 runs. When we increased the PTCR to 8.1 dB, the divergence rates fell to 2 out of 100 for the APF, and 3 out of 100 for the RS filter. Figures 6(a) and 6(b) show, in the case of PTCR = 6.5 dB, the root mean square (RMS) error curves (in number of pixels) for the target's position estimates, respectively, in coordinates $x$ and $y$ generated by both the APF and the RS trackers. The RMS error curves in Figure 6 were computed from the estimation errors recorded in each of the 100 Monte Carlo trials, excluding the divergent realizations. Our simulation results suggest that, despite the reduction in the number of particles from 8000 to 5000, the APF tracker still outperforms the RS tracker, showing similar RMS error performance with a slightly lower divergence rate. For both filters, in the nondivergent realizations, the estimation error is higher in the initial frames and decreases over time as the target is acquired and new images are processed.
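The per-frame RMS error computation described above, which excludes the divergent realizations, can be sketched in Python as follows. The function name `rms_error_per_frame` and the data layout (one list of per-frame position errors per Monte Carlo run, plus a list of divergence flags) are assumptions made for illustration. The criterion used to flag a run as divergent is not specified here, so the flags are taken as inputs.

```python
import math


def rms_error_per_frame(errors_by_run, diverged):
    """RMS position error at each frame over the non-divergent runs.

    errors_by_run: list of runs, each a list of per-frame errors (pixels).
    diverged: list of booleans, True for runs to be excluded."""
    kept = [run for run, bad in zip(errors_by_run, diverged) if not bad]
    if not kept:
        raise ValueError("no non-divergent runs to average")
    n_frames = len(kept[0])
    return [math.sqrt(sum(run[k] ** 2 for run in kept) / len(kept))
            for k in range(n_frames)]


# Three toy runs over two frames; the third run is flagged as divergent.
curve = rms_error_per_frame([[3.0, 4.0], [4.0, 3.0], [100.0, 100.0]],
                            [False, False, True])
```

Excluding the flagged run keeps the curve from being dominated by a single lost track, which is why the divergence rate is reported separately from the RMS error.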

5. Preliminary Discussion on Multitarget Tracking

We have considered so far a single target with uncertain aspect (e.g., random orientation or scale). In theory, however, the same modeling framework could be adapted to a scenario where we consider multiple targets with known (fixed) aspect. In that case, the discrete state $z_n$, rather than representing a possible target model, could denote instead a possible multitarget configuration hypothesis. For example, if we knew a priori that there is a maximum of $M$ targets in the field of view of the sensor at each time instant, then $z_n$ would take $2^M$ possible values corresponding to the hypotheses ranging from "no target present" to "all targets present" in the image frame at instant $n$. The kinematic state $\mathbf{s}_n$, on the other hand, would have variable dimension depending on the value assumed by $z_n$, as it would collect the centroid locations of all targets that are present in the image given a certain target configuration hypothesis. Different targets could be assumed to move independently of each other when present and to disappear only when they move out of the target grid as discussed in Section 2. Likewise, a change in target configuration hypotheses would result in new targets appearing in uniformly random locations as in (5).
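To make the configuration hypotheses concrete, the Python sketch below enumerates them under the assumption that each of the targets is individually either present or absent, which gives two to the power of the maximum number of targets configurations. The function names and the choice of two centroid coordinates per present target are illustrative assumptions.

```python
from itertools import product


def target_configurations(max_targets):
    """All presence/absence patterns, from no target to all targets present."""
    return list(product((0, 1), repeat=max_targets))


def kinematic_dimension(configuration, per_target_dim=2):
    """Dimension of the stacked state: one block per target that is present."""
    return per_target_dim * sum(configuration)


configs = target_configurations(2)
dims = [kinematic_dimension(c) for c in configs]
```

For two targets this yields four hypotheses, and the stacked kinematic state has dimension 0, 2, 2, and 4 under them, which illustrates why the particle dimension varies with the discrete state.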

The main difficulty associated with the approach described in the previous paragraph is however that, as the number of targets increases, the corresponding growth in the dimension of the state space is likely to exacerbate particle depletion, thus causing the detection/tracking filters to diverge if the number of particles is kept constant. That may render the direct application of the joint detection/tracking algorithms in this paper unfeasible in a multitarget scenario. The basic tracking routines discussed in the paper may be still viable though when used in conjunction with more conventional algorithms for target detection/acquisition and data association. For a review of alternative approaches to multitarget tracking, mostly for video applications, we refer the reader to [30–33].

5.1. Likelihood Function Modification in a Multitarget Scenario

In the alternative scenario with multiple (at most $M$) targets, where $z_n$ represents one of $2^M$ possible target configurations, the likelihood function model in (10) depends instead on a sum of data terms

and a sum of energy terms

where  is the long-vector representation of the clutter-free image of the $i$th target under the target configuration hypothesis $z_n$, assumed to be identically zero for target configurations under which the $i$th target is not present. The sum of the data terms corresponds to the sum of the outputs of different correlation filters matched to each of the $M$ possible (fixed) target templates taking into account the spatial correlation of the clutter background. The energy terms,  , are on the other hand constant with  for most possible locations of targets  and  on the image grid, except when either one of the two targets or both are close to the image borders. Finally, for  , the energy terms are zero for present targets that are sufficiently apart from each other and, therefore, most of the time, they do not affect the computation of the likelihood function. The terms  must be taken into account, however, for overlapping targets; in this case, they may be computed efficiently by exploiting the sparse structure of  and  . For details, we refer the reader to future work.

5.2. Illustrative Example with Two Targets

We conclude this preliminary discussion on multitarget tracking with an illustrative example where we track two simulated targets moving on the same real clutter background from Section 4 for 22 consecutive frames. This example differs, however, from the simulations in Section 4 in the sense that, rather than performing joint detection and tracking of the two targets, the algorithm assumes a priori that two targets are always present in the scene and performs target tracking only. The two targets are preacquired (detected) in the initial frame such that their initial positions are known up only to a small uncertainty. For this particular simulation, with PTCR ≈ 12.5 dB, that preliminary acquisition was done by applying the differential filter in (16) to the initial frame, and then applying the output of the differential filter to a bank of two spatial matched filters as in (15), designed according to the signature coefficients, respectively, for targets 1 and 2. The outputs of the two matched filters minus the corresponding energy terms for targets 1 and 2, respectively, are finally added together and thresholded to provide the initial estimates of the location of the two targets. Note that the cross-energy terms discussed in Section 5.1 may be ignored in this case since we are assuming that the two targets are initially sufficiently far apart. Frames 1 and 10 of the simulated cluttered sequence with the two targets are shown in Figures 7(a) and 7(b) for illustration purposes.

Once the two targets are initially acquired, we track them jointly from the raw sensor images (i.e., without any other conventional decoupled data association/tracking method) using an auxiliary particle filter that assumes the modified likelihood function of Section 5.1 and two independent motion models, respectively, for targets 1 and 2. The tracking filter uses  particles. Figure 8 shows the actual and estimated trajectories, respectively, for targets 1 and 2. As we can see from the plots, the experimental results are worse than those obtained in the single target, multiaspect case, but the filter was still capable of tracking the pixel locations of the centroids of the two targets fairly accurately with errors ranging from zero to two or three pixels at most and without using any ad hoc data association routine.

6. Conclusions and Future Work

We discussed in this paper a methodology for joint detection and tracking of multiaspect targets in remote sensing image sequences using sequential Monte Carlo (SMC) filters. The proposed algorithm enables integrated, multiframe target detection and tracking incorporating the statistical models for target motion, target aspect, and spatial correlation of the background clutter. Due to the nature of the application, the emphasis is on detecting and tracking small, remote targets under additive clutter, as opposed to tracking nearby objects possibly subject to occlusion.

Two different implementations of the SMC detector/tracker were presented using, respectively, a resample-move (RS) particle filter and an auxiliary particle filter (APF). Simulation results show that, in scenarios with heavily obscured targets, the APF and RS configurations have similar tracking performance, but the APF algorithm has a slightly smaller percentage of divergent realizations. Both filters, on the other hand, were capable of correctly detecting the target in each frame, including accurately declaring absence of target when the target left the scene and, conversely, detecting a new target when it entered the image grid. The multiframe track-before-detect approach allowed for efficient detection of dim targets that may be near invisible in a single-frame but become detectable when seen across multiple frames.

The discussion in this paper was restricted to targets that assume only a finite number of possible aspect states defined on a library of target templates. As an alternative for future work, an appearance model similar to the one described in [24] could be used instead, allowing the discrete-valued aspect states $z_n$ to denote different classes of continuous-valued target deformation models, as opposed to fixed target templates. Similarly, the framework in this paper could also be modified to allow for multiobject tracking as indicated in Section 5.

References

Doucet A, Godsill S, Andrieu C: On sequential Monte Carlo sampling methods for Bayesian filtering. Statistics and Computing 2000, 10(3):197-208. 10.1023/A:1008935410038

Bounds JK: The Infrared airborne radar sensor suite. In RLE Tech. Rep. 610. Massachusetts Institute of Technology, Cambridge, Mass, USA; 1996.

Bar-Shalom Y, Li X: Multitarget-Multisensor Tracking: Principles and Techniques. YBS Publishing, Storrs, Conn, USA; 1995.

Bruno MGS: Bayesian methods for multiaspect target tracking in image sequences. IEEE Transactions on Signal Processing 2004, 52(7):1848-1861. 10.1109/TSP.2004.828903

Bruno MGS, Moura JMF: Optimal multiframe detection and tracking in digital image sequences. Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '00), June 2000, Istanbul, Turkey 5: 3192-3195.

Bruno MGS, Moura JMF: Multiframe detector/tracker: optimal performance. IEEE Transactions on Aerospace and Electronic Systems 2001, 37(3):925-945. 10.1109/7.953247

Bruno MGS, Moura JMF: Multiframe Bayesian tracking of cluttered targets with random motion. Proceedings of the International Conference on Image Processing (ICIP '00), September 2000, Vancouver, BC, Canada 3: 90-93.

Arulampalam MS, Maskell S, Gordon N, Clapp T: A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Transactions on Signal Processing 2002, 50(2):174-188. 10.1109/78.978374

Moura JMF, Balram N: Recursive structure of noncausal Gauss-Markov random fields. IEEE Transactions on Information Theory 1992, 38(2):334-354. 10.1109/18.119691

Moura JMF, Bruno MGS: DCT/DST and Gauss-Markov fields: conditions for equivalence. IEEE Transactions on Signal Processing 1998, 46(9):2571-2574. 10.1109/78.709549

Moura JMF, Balram N: Noncausal Gauss Markov random fields: parameter structure and estimation. IEEE Transactions on Information Theory 1993, 39(4):1333-1355. 10.1109/18.243450

Schweizer SM, Moura JMF: Hyperspectral imagery: clutter adaptation in anomaly detection. IEEE Transactions on Information Theory 2000, 46(5):1855-1871. 10.1109/18.857796

Isard M, Blake A: A mixed-state condensation tracker with automatic model-switching. Proceedings of the 6th International Conference on Computer Vision, January 1998, Bombay, India 107-112.

Doucet A, Freitas JFG, Gordon N: An introduction to sequential Monte Carlo methods. In Sequential Monte Carlo Methods in Practice. Edited by: Doucet A, Freitas JFG, Gordon NJ. Springer, New York, NY, USA; 2001.

Bruno MGS, de Araújo RV, Pavlov AG: Sequential Monte Carlo filtering for multi-aspect detection/tracking. Proceedings of the IEEE Aerospace Conference, March 2005, Big Sky, Mont, USA 2092-2100.

Gilks WR, Berzuini C: Following a moving target—Monte Carlo inference for dynamic Bayesian models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2001, 63(1):127-146. 10.1111/1467-9868.00280

Gordon N, Salmond D, Ewing C: Bayesian state estimation for tracking and guidance using the bootstrap filter. Journal of Guidance, Control, and Dynamics 1995, 18(6):1434-1443. 10.2514/3.21565

Robert CP, Casella G: Monte Carlo Statistical Methods, Springer Texts in Statistics. Springer, New York, NY, USA; 1999.

Pitt MK, Shephard N: Filtering via simulation: auxiliary particle filters. Journal of the American Statistical Association 1999, 94(446):590-599. 10.2307/2670179

Isard M, Blake A: Condensation—conditional density propagation for visual tracking. International Journal of Computer Vision 1998, 29(1):5-28. 10.1023/A:1008078328650

Li B, Chellappa R: A generic approach to simultaneous tracking and verification in video. IEEE Transactions on Image Processing 2002, 11(5):530-544. 10.1109/TIP.2002.1006400

Zhou SK, Chellappa R, Moghaddam B: Visual tracking and recognition using appearance-adaptive models in particle filters. IEEE Transactions on Image Processing 2004, 13(11):1491-1506. 10.1109/TIP.2004.836152

Dempster AP, Laird NM, Rubin DB: Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 1977, 39(1):1-38.

Giebel J, Gavrila DM, Schnörr C: A bayesian framework for multi-cue 3D object tracking. Proceedings of the 8th European Conference on Computer Vision (ECCV '04), May 2004, Prague, Czech Republic 4: 241-252.

Vermaak J, Doucet A, Pérez P: Maintaining multi-modality through mixture tracking. Proceedings of the 9th IEEE International Conference on Computer Vision (ICCV '03), October 2003, Nice, France 2: 1110-1116.

Okuma K, Taleghani A, de Freitas N, Little JJ, Lowe DG: A boosted particle filter: multitarget detection and tracking. Proceedings of the 8th European Conference on Computer Vision (ECCV '04), May 2004, Prague, Czech Republic 3021: 28-39.

Geweke J: Bayesian inference in econometric models using Monte Carlo integration. Econometrica 1989, 57(6):1317-1339. 10.2307/1913710

Liu JS, Chen R, Logvinenko T: A theoretical framework for sequential importance sampling with resampling. In Sequential Monte Carlo Methods in Practice. Edited by: Doucet A, Freitas JFG, Gordon NJ. Springer, New York, NY, USA; 2001:225-246.

Video Demonstration 1 & 2 http://www.ele.ita.br/~bruno

Ng W, Li J, Godsill S, Vermaak J: Tracking variable number of targets using sequential Monte Carlo methods. Proceedings of the IEEE/SP 13th Workshop on Statistical Signal Processing, July 2005, Bordeaux, France 1286-1291.

Ng W, Li J, Godsill S, Vermaak J: Multitarget tracking using a new soft-gating approach and sequential Monte Carlo methods. Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '05), March 2005, Philadelphia, Pa, USA 4: 1049-1052.

Hue C, Le Cadre J-P, Pérez P: Tracking multiple objects with particle filtering. IEEE Transactions on Aerospace and Electronic Systems 2002, 38(3):791-812. 10.1109/TAES.2002.1039400

Czyz J, Ristic B, Macq B: A color-based particle filter for joint detection and tracking of multiple objects. Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '05), March 2005, Philadelphia, Pa, USA 217-220.

Acknowledgment

Part of the material in this paper was presented at the 2005 IEEE Aerospace Conference.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Bruno, M.G.S., Araújo, R.V. & Pavlov, A.G. Sequential Monte Carlo Methods for Joint Detection and Tracking of Multiaspect Targets in Infrared Radar Images. EURASIP J. Adv. Signal Process. 2008, 217373 (2007). https://doi.org/10.1155/2008/217373


DOI: https://doi.org/10.1155/2008/217373

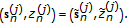

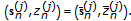
