Hierarchical CoSaMP for compressively sampled sparse signals with nested structure

EURASIP Journal on Advances in Signal Processing volume 2014, Article number: 80 (2014)

Abstract

This paper presents a novel procedure, named Hierarchical Compressive Sampling Matching Pursuit (CoSaMP), for reconstruction of compressively sampled sparse signals whose coefficients are organized according to a nested structure. The Hierarchical CoSaMP is inspired by the CoSaMP algorithm, and it is based on a suitable hierarchical extension of the support over which the compressively sampled signal is reconstructed. We analytically demonstrate the convergence of the Hierarchical CoSaMP and show by numerical simulations that the Hierarchical CoSaMP outperforms state-of-the-art algorithms in terms of accuracy for a given number of measurements at a restrained computational complexity.

1 Introduction

The burgeoning field of compressive sampling (CS) addresses the recovery of signals which are sparse either in the original domain or in a different representation domain achieved by a suitable invertible transform. The CS theory establishes conditions for recovering sparse signals from measurements acquired without satisfying the Nyquist criterion, provided that suitable relations between the number of measurements and the signal sparsity are satisfied. CS studies encompass different issues, ranging from measurement acquisition via random projections to signal recovery algorithms; besides, CS reconstruction algorithms may leverage the underlying structure of specific signals. The reconstruction algorithm Compressive Sampling Matching Pursuit (CoSaMP) by Needell and Tropp [1] represents a starting point in the definition of reconstruction procedures. The reasons are manifold. Firstly, its solid analytical derivation lends itself to different extensions accounting for peculiar signal structures [2]. Secondly, its iterative structure may be extended to encompass prior knowledge on the signal to be reconstructed [3]. Finally, the CoSaMP degrades gracefully with noise, since the reconstruction error is bounded by a value proportional to the energy of the noise vector, for a sufficiently large number of measurements.

In principle, the minimal number of measurements for the CoSaMP algorithm to converge on a sparse noise-free signal is the least required by CS theory [1]. Still, in [4], it is observed that procedures exploiting the sparse signal structure can converge with a number of measurements of the order of the signal sparsity [5], whereas numerical examples show that the CoSaMP may require a number of measurements about four times larger than the signal sparsity. It is therefore argued that, in specific applications, the number of measurements may be in principle sufficient to recover the signal under concern, exactly or in an approximate form, while still not being enough for the CoSaMP to converge. This limits the accuracy and applicability of CoSaMP in a variety of resource-limited applications, such as CS in sensor networks [6].

Several studies have so far been proposed to bridge the gap between the minimum number of measurements predicted by CS theory and the number required by the CoSaMP to converge. A fundamental study by Baraniuk et al. [2] proposes to exploit knowledge about peculiar structures exhibited by the sparse signal. Specifically, the authors focus on the reconstruction of K-compressible signals, i.e. signals that are approximately reconstructed by K coefficients, and show that if the signal presents a suitable structure, the reconstruction procedure can exploit this knowledge to constrain the recovered signal subspace and improve the accuracy for a given number of measurements.

The analysis in [2] introduces the concept of nested approximation property of a signal sparsity model to derive theoretical bounds for CS reconstruction. Further, a priori constraints on the structure of the compressible signal, e.g. the organization in blocks or in a tree structure, are then invoked to take advantage of the signal sparsity model and reduce the solution space of the recovery algorithm.

Herein, in the light of the work in [2], we concern ourselves with signals whose coefficients are organized according to a nested structure. For such signals, we introduce a modified version of the CoSaMP algorithm, referred to as Hierarchical CoSaMP (HCoSaMP), that exploits the underlying assumption that the signal is hierarchically structured. Specifically, in HCoSaMP, the estimated support is progressively extended from one hierarchical layer to the next throughout different estimation stages. Differently from the analysis in [2], only the assumption on the nested signal structure is needed to formally state the convergence of the HCoSaMP. The analysis presented herein thus imposes mild assumptions on the signals, and it applies in general cases where structured sparsity cannot be claimed. Besides, we provide application examples on images obtained by oceanographic monitoring [6, 7] and natural images [8], as well as on texture images [9]. For the former cases, we select the well-known discrete wavelet transform as a sparsifying transform, whereas for the latter, we resort to the graph-based transform, originally established for depth map encoding, as a sparsity-achieving representation within the reconstruction procedure. In both cases, we show that our procedure outperforms state-of-the-art reconstruction algorithms and extends the feasibility of the reconstruction to the case of a reduced number of measurements.

The structure of the paper is as follows. In Section 2, we recall the CS basics, while in Section 3, we discuss the CS of a sparse signal with nested structure. In Section 4, we describe the HCoSaMP and outline the demonstration of its convergence, and in Section 5, we report the numerical simulation results. Finally, Section 6 concludes the paper.

2 Compressive sampling basics

Let us consider an image x[n1,n2], and let us denote by x the N×1 vector built by collecting its samples in lexicographic order; besides, let us assume that x is K-sparse. Let y denote the M×1 vector of CS measurements, given by

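$$ y = \Phi x + n \qquad (1) $$

where Φ is a suitable M×N random sensing matrix, and n is the M×1 acquisition noise. For perfect reconstruction of x given y, the sensing matrix is supposed to satisfy the restricted isometry property (RIP) [10], i.e. for every K-sparse vector x and a constant δ_K in (0,1):

$$ (1-\delta_K)\,\|x\|_2^2 \;\le\; \|\Phi x\|_2^2 \;\le\; (1+\delta_K)\,\|x\|_2^2 \qquad (2) $$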

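It can be proved that a matrix with i.i.d. random entries drawn from a Gaussian distribution with zero mean and variance 1/M satisfies the RIP with high probability [11, 12] provided that

$$ M \;\ge\; c\,K \log\!\left(\frac{N}{K}\right) \qquad (3) $$

where c is a constant depending on the RIP constant δ_K.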

Similar results have been derived for different classes of random sensing matrices. The relation (3) binds the signal sparsity K and the minimal number of measurements M_K for the matrix to obey the RIP with a given RIP constant value δ_K; conversely, for a K-sparse signal, the RIP constant value δ_K with which a selected matrix satisfies the RIP depends on the available number of measurements M (see [13] for a detailed discussion), and for a fixed value of M, the value of δ_K increases with K.
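The near-isometry behaviour described above can be illustrated numerically. The following is a minimal sketch, assuming NumPy and illustrative sizes (M=400, N=1024, K=20) that are not taken from the paper; the function names are hypothetical. Note that sampling random K-sparse vectors only yields an empirical lower estimate of δ_K, since the RIP constant is defined as a worst case over all K-sparse vectors.

```python
import numpy as np

def gaussian_sensing_matrix(M, N, rng):
    """M x N matrix with i.i.d. zero-mean Gaussian entries of variance 1/M."""
    return rng.normal(0.0, 1.0 / np.sqrt(M), size=(M, N))

def random_k_sparse(N, K, rng):
    """Length-N vector with K non-zero Gaussian entries at random positions."""
    x = np.zeros(N)
    support = rng.choice(N, size=K, replace=False)
    x[support] = rng.normal(size=K)
    return x

def empirical_isometry_ratios(Phi, K, trials, rng):
    """Energy ratios ||Phi x||^2 / ||x||^2 over random K-sparse vectors."""
    N = Phi.shape[1]
    ratios = np.empty(trials)
    for t in range(trials):
        x = random_k_sparse(N, K, rng)
        ratios[t] = np.sum((Phi @ x) ** 2) / np.sum(x ** 2)
    return ratios

rng = np.random.default_rng(0)
Phi = gaussian_sensing_matrix(M=400, N=1024, rng=rng)
ratios = empirical_isometry_ratios(Phi, K=20, trials=200, rng=rng)

# Lower estimate of the RIP constant: largest observed deviation from 1.
delta_hat = max(1.0 - ratios.min(), ratios.max() - 1.0)
print(f"energy ratios in [{ratios.min():.3f}, {ratios.max():.3f}], "
      f"empirical deviation {delta_hat:.3f}")
```

Repeating the experiment with a smaller M for the same K widens the observed interval of energy ratios, which is the qualitative dependence between M, K and δ_K discussed above.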

Often, the signal is assumed to be sparse under a sparsifying transformation identified by a transform basis matrix Ψ. Then, x is expressed as x=Ψα, where the vector α collects the N transform coefficients. With these positions, we can rewrite the acquisition process in (1) in terms of α. With a slight abuse of notation, from here on Φ denotes the M×N sensing matrix acting on the transform coefficients, i.e. the product of the sensing matrix in (1) and Ψ:

$$ y = \Phi\,\alpha + n \qquad (4) $$

In the following, we refer to a K-sparse signal such that only K out of its N transform coefficients are non-zero valued; besides, we denote by Ω the support of the K non-zero coefficients of α, a subset of the coefficient index set with cardinality |Ω|=K<N.

3 CS of a sparse signal with nested structure

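Let us consider a group of L+1 nested subsets of the overall index set, each one included in the next. We also consider a partition of the support Ω of the non-zero terms of α into a finite number of sets Ω_l, l=0,…,L, of cardinality K_l=|Ω_l|, l=0,…,L, defined as follows: Ω_0 collects the indices of Ω falling in the innermost subset, and each Ω_l, l=1,…,L, collects the indices of Ω falling in the l-th subset but not in the (l−1)-th one.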

The sets Ω_i are disjoint, i.e. Ω_i ∩ Ω_j = ∅ for j≠i, and the union of the Ω_i's up to the L-th is Ω.

The above partition is found, for instance, in the L decomposition levels of the wavelet transform of a natural image, where the innermost subset can be associated to the indexes of the scaling coefficients, and the subsequent subsets can be formed by progressively including the indexes corresponding to the increasing wavelet decomposition levels. In this example, the set Ω_0 is built by the indexes of the non-zero scaling coefficients, and the sets Ω_l, l=1,…,L, correspond to the incremental supports of the non-zero coefficients found in each of the nested subsets. Although the most familiar, the wavelet domain is not the sole one in which a hierarchical organization of the transform coefficients is observed. In Section 5, we show with the help of numerical examples that the graph-based transform (GBT) of texture images reveals a hierarchical structure, too.

The signal x satisfies the nested approximation property (NAP) if the support of the best (in the least squares sense) K-term approximation in includes the support of the best K′-term approximation in for all K≥K′, and for i=1,…L. This property, referred to as the NAP, is invoked in [2] on structured sparse signal models to derive a tight bound on the number of CS measurements required for signal reconstruction. The therein presented recovery algorithm exploits a priori knowledge on the structured nature of the signal. Herein, we drop further hypotheses on the signal structure, and we elaborate on the nestedness of the signal support.

Let us then consider a signal x represented by a sparsifying transform Ψ, and let us assume that it satisfies the NAP in the transform domain. We rewrite the vector α as α = α_0 + α_1 + … + α_L, where α_l denotes a vector whose entries coincide with those of α for indexes in Ω_l and are zero otherwise. Each vector α_l has sparsity K_l, and the union of the Ω_i's up to the l-th yields the best approximation of the signal itself among those with the same number of terms.

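The vector x can then be expressed as the sum of the contributions due to the different transform domain layers

$$ x = \Psi\alpha = \sum_{l=0}^{L} \Psi_{\Omega_l}\,\alpha|_{\Omega_l} \qquad (5) $$

where Ψ_{Ω_l} denotes the restriction of Ψ to the columns with indices in the disjoint sets Ω_l, and α|_{Ω_l} collects the entries of α with indices in Ω_l.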

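Because of the decomposition in (5), we can rewrite the acquisition process in (4) as follows:

$$ y = \sum_{l=0}^{L} \Phi\,\alpha_l + n \qquad (6) $$

where α_l denotes the portion of α confined to the support Ω_l. For the sake of simplicity, and without loss of generality, let us first refer to a nested decomposition encompassing only two layers, i.e. l∈{0,1}. Under this position, we can rewrite (6) as

$$ y = \Phi\,\alpha_0 + \Phi\,\alpha_1 + n = \Phi\,\alpha_0 + e_0, \qquad e_0 = \Phi\,\alpha_1 + n \qquad (7) $$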

The formulation in (7) gives a simple and yet interesting insight into the CS acquisition process. To elaborate, the M measurements collected in (7) can be interpreted as either (i) the acquisition of the K-sparse vector α with a measurement noise n of energy ∥n∥² or (ii) the acquisition of the K_0-sparse vector α_0 with a measurement noise e_0 of energy ∥e_0∥², suitably bounded because of the RIP of the matrix Φ.
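The two readings of the same measurement vector can be checked numerically. The sketch below assumes NumPy, a Gaussian Φ, and hypothetical layer supports and amplitudes chosen only for illustration; it verifies that y coincides with Φα_0 plus an equivalent noise term e_0 = Φα_1 + n, and that the norm of e_0 is bounded through the triangle inequality.

```python
import numpy as np

rng = np.random.default_rng(1)
M, N = 200, 512
K0, K1 = 10, 30                      # hypothetical layer sparsities

Phi = rng.normal(0.0, 1.0 / np.sqrt(M), size=(M, N))

omega0 = np.arange(0, K0)            # inner-layer support (illustrative)
omega1 = np.arange(64, 64 + K1)      # outer-layer support, disjoint from omega0

alpha0 = np.zeros(N)
alpha0[omega0] = rng.normal(size=K0)
alpha1 = np.zeros(N)
alpha1[omega1] = 0.1 * rng.normal(size=K1)
n = 0.01 * rng.normal(size=M)        # acquisition noise

# Reading (i): acquisition of the whole sparse vector with noise n.
y = Phi @ (alpha0 + alpha1) + n

# Reading (ii): acquisition of alpha0 only, with equivalent noise e0.
e0 = Phi @ alpha1 + n
same_measurements = np.allclose(y, Phi @ alpha0 + e0)

# Triangle-inequality bound on the equivalent noise norm.
bound = np.linalg.norm(Phi @ alpha1) + np.linalg.norm(n)
print(same_measurements, np.linalg.norm(e0) <= bound)
```

The smaller the energy of the outer layer α_1, the closer reading (ii) is to a low-noise acquisition of a K_0-sparse vector.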

4 Hierarchical CoSaMP

Here, we propose a modified version of the CoSaMP procedure for reconstructing sparse signals exhibiting the nested structure introduced above. In short, we show that a signal endowed with such a nested hierarchical structure can be reconstructed by recursive application of the core stage of the CoSaMP algorithm on progressively expanded supports. Furthermore, we show that the resulting procedure, which we refer to as Hierarchical CoSaMP (HCoSaMP), requires a lower number of measurements than the original CoSaMP to incrementally reconstruct the signal up to its best K-term approximation.

Before turning to mathematics, we make two observations. Firstly, the reformulation (7) of the relation in (6) suggests recovering the coefficients of α_0 starting from the measurements y, instead of trying to recover the whole signal α. The requirement on the number of measurements for the CoSaMP convergence in reconstructing the K_0 samples of α_0 is indeed looser than that for reconstruction of the K samples of α. Specifically, the number of measurements M must be large enough for the RIP to hold with constant δ_{4K_0}, but not necessarily with δ_{4K} [1]. If the RIP for δ_{4K_0} is satisfied, the samples of α_0 can be recovered by the CoSaMP algorithm [2], and numerical bounds relating the estimation error to the signal energy out of Ω_0 are provided.

Specifically, in [1], the accuracy achieved at convergence by the CoSaMP algorithm is characterized by the following mean square error bound:

From (8), we recognize that the performance of such CoSaMP-based stage degrades gracefully as the energy out of Ω0 increases, just as it occurs when CoSaMP recovers noisy signals or compressible signals.

Secondly, let us assume that we have recovered, to a certain degree of accuracy, the samples of α_0 starting from the measurements collected as in (7), so as to have the best K_0-term approximation of the sparse signal α. Then, this coarse estimate can be adopted as a better initialization of a CoSaMP procedure, in order to recover the remaining K_1 − K_0 coefficients providing the best K_1-term approximation of α itself.

Stemming from these observations, it is fair to ask if the recovery of the signal on the l-th support can exploit the partial knowledge of the signal on the supports up to the (l−1)-th one. In the following subsection, we prove that if the signal under concern satisfies the NAP, it is indeed possible to recover all the coefficients α by (i) first recovering the coefficients α_0 and then (ii) recursively injecting the coefficients recovered on the support Ω_{l−1} as initialization for recovering the coefficients on the support Ω_l.

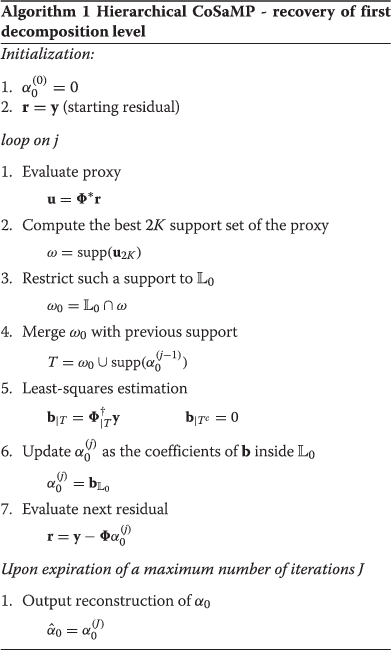

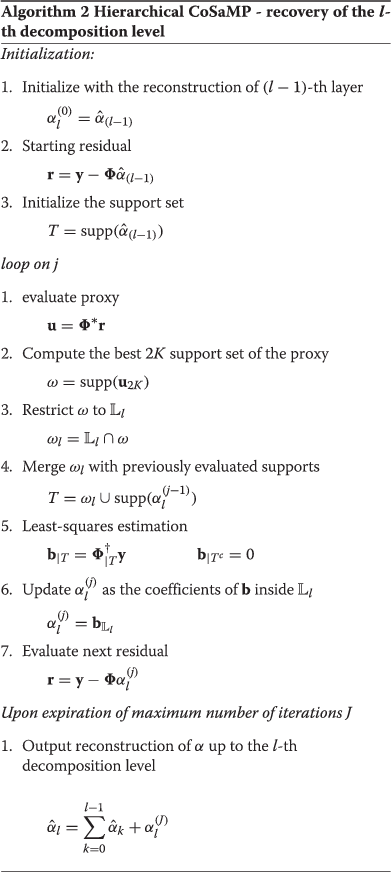

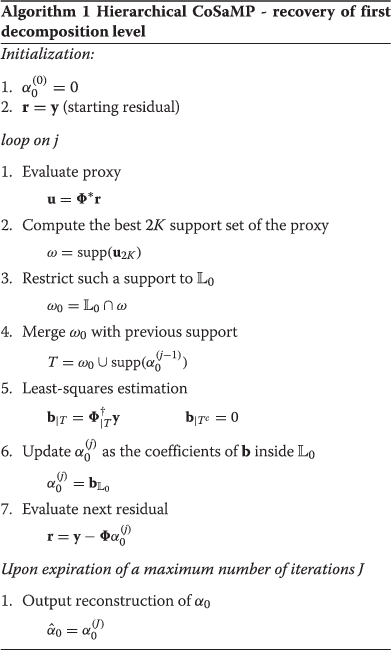

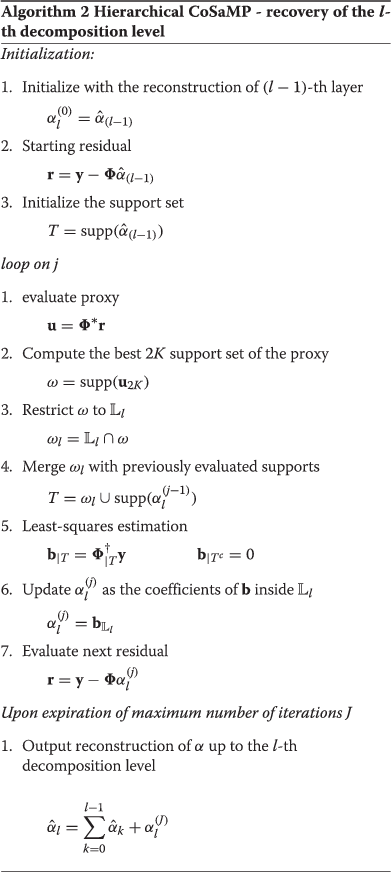

The recovery stages (i) and (ii) are respectively realized according to Algorithms 1 and 2, where we denote by Φ† the pseudo-inverse of Φ, i.e. (Φ*Φ)^{−1}Φ*; by x_N the restriction of x to its N largest-magnitude components; by x|_T (A|_T) the restriction of x (A) to the elements (columns) of indices in the set T; and by |T| the cardinality of the set T.
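The operators introduced by this notation map directly onto array operations. The following is a minimal NumPy sketch; the function names are hypothetical and chosen only for illustration, and real-valued matrices are assumed so that the conjugate transpose reduces to the transpose.

```python
import numpy as np

def largest_components(x, n_keep):
    """Restriction of x to its n_keep largest-magnitude components."""
    out = np.zeros_like(x)
    if n_keep > 0:
        idx = np.argsort(np.abs(x))[-n_keep:]
        out[idx] = x[idx]
    return out

def restrict(x, T):
    """Entries of x with indices in the set T."""
    return x[np.asarray(T)]

def least_squares_on_support(Phi, y, T):
    """Apply the pseudo-inverse of the columns of Phi indexed by T to y,
    and embed the result into a full-length vector (zero outside T)."""
    T = np.asarray(T)
    b = np.zeros(Phi.shape[1])
    b[T] = np.linalg.pinv(Phi[:, T]) @ y
    return b

x = np.array([3.0, -1.0, 0.5, -4.0])
x_2 = largest_components(x, 2)          # keeps 3.0 and -4.0, zeros elsewhere

rng = np.random.default_rng(3)
Phi = rng.normal(size=(6, 4))
T = [0, 2]
y = Phi[:, T] @ np.array([1.0, 2.0])    # noise-free measurements on support T
b = least_squares_on_support(Phi, y, T) # recovers 1.0 and 2.0 at indices 0, 2
```

For a matrix with full column rank, `np.linalg.pinv` coincides with the expression (Φ*Φ)^{−1}Φ* used in the text.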

To sum up, the outline of the HCoSaMP is as follows:

(a) Recovery of the first decomposition layer. The outline of this partial estimation stage appears in Algorithm 1; this stage encompasses all the steps of the CoSaMP, plus an additional step (namely step 3 in Algorithm 1), which tailors the support estimated at each iteration to the predefined support of the first layer.

(b) Recovery of the l-th decomposition layer. The outline of the estimation stage providing the reconstruction of the signal up to the support Ω_l, given its previous estimate up to the support Ω_{l−1}, appears in Algorithm 2. This stage still reproduces the steps encompassed by the CoSaMP, from which it differs because it is initialized with the signal reconstructed up to the previous layer and it limits the estimate to the predefined support of the l-th layer.
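The two stages outlined above can be sketched in code. Since Algorithms 1 and 2 are not reproduced in the text, what follows is only one possible reading of the prose, not the authors' listing: a CoSaMP-like stage whose signal proxy is masked to a predefined index set, optionally warm-started with the previous estimate. The names `cosamp_stage` and `hcosamp`, the stopping rule, the use of cumulative sparsities per stage and all sizes in the demo are assumptions.

```python
import numpy as np

def cosamp_stage(Phi, y, K, allowed, a_init=None, n_iter=30, tol=1e-9):
    """CoSaMP-like stage restricted to the index set `allowed` (assumed reading)."""
    N = Phi.shape[1]
    mask = np.zeros(N, dtype=bool)
    mask[np.asarray(allowed)] = True
    a = np.zeros(N) if a_init is None else a_init.copy()  # warm start if given
    r = y - Phi @ a                                        # starting residual
    for _ in range(n_iter):
        if np.linalg.norm(r) <= tol * np.linalg.norm(y):
            break
        proxy = Phi.T @ r                                  # signal proxy
        proxy[~mask] = 0.0                                 # tailor to allowed set
        cand = np.argsort(np.abs(proxy))[-2 * K:]          # 2K largest entries
        cand = cand[proxy[cand] != 0.0]
        T = np.union1d(cand, np.flatnonzero(a)).astype(int)  # merge supports
        if T.size == 0:
            break
        b = np.zeros(N)
        b[T] = np.linalg.lstsq(Phi[:, T], y, rcond=None)[0]  # LS estimate on T
        keep = np.argsort(np.abs(b))[-K:]                  # prune to K terms
        a = np.zeros(N)
        a[keep] = b[keep]
        r = y - Phi @ a                                    # update residual
    return a

def hcosamp(Phi, y, nested_sets, sparsities, n_iter=30):
    """Run the stage over nested index sets, injecting each estimate into the next."""
    a = None
    for allowed, K in zip(nested_sets, sparsities):
        a = cosamp_stage(Phi, y, K, allowed, a_init=a, n_iter=n_iter)
    return a

# Noise-free toy example with two layers (all values are illustrative).
rng = np.random.default_rng(2)
M, N = 150, 256
Phi = rng.normal(0.0, 1.0 / np.sqrt(M), size=(M, N))
alpha = np.zeros(N)
inner = rng.choice(32, size=4, replace=False)
outer = 32 + rng.choice(96, size=6, replace=False)
alpha[inner] = rng.choice([-1.0, 1.0], size=4) * rng.uniform(1.0, 2.0, size=4)
alpha[outer] = rng.choice([-1.0, 1.0], size=6) * rng.uniform(0.3, 0.6, size=6)
y = Phi @ alpha

nested_sets = [np.arange(32), np.arange(128)]   # inner set, then extended set
a_hat = hcosamp(Phi, y, nested_sets, sparsities=[4, 10])
rel_err = np.linalg.norm(a_hat - alpha) / np.linalg.norm(alpha)
print(f"relative reconstruction error: {rel_err:.2e}")
```

In this sketch, the first stage sees the outer-layer coefficients as additional noise, while the second stage starts from a residual from which most of the inner-layer energy has already been removed.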

In the following subsection, we formally prove the convergence of the HCoSaMP algorithm on NAP signals.

4.1 HCoSaMP convergence on a sparse signal with nested structure

The convergence theorem is presented in two parts, respectively establishing the convergence in Algorithm 1 (Part I) and in Algorithm 2 (Part II).

Theorem 4.1 (Part I: Convergence of Algorithm 1)

Let us consider with , and a set of M CS noisy measurements y obtained as y=Φ α0+e0 according to (7). If Φ exhibits a RIP constant for the value of M at hand, then it can be proved that the mean square error on the estimate obtained at the j-th iteration of the HCoSaMP algorithm can be upper bounded as follows:

Proof

(Proof of Theorem 4.1, Part I) To prove the convergence of Algorithm 1, we follow the guidelines in [1], by adapting them to the nested signal structure invoked by the Hierarchical CoSaMP algorithm. Specifically, we show that the reconstruction error at the j-th iteration of the algorithm

is upper bounded by a term that, in the case of noise-free acquisition, decays asymptotically to zero. Towards this aim, we prove a series of inequalities providing the desired result.

At each iteration of the algorithm, the reconstructed signal is selected as the restriction of the least squares estimate b over the support . Based on such a restriction, considering that α0 lives, by definition, over the support , we can write

The following Lemma 4.1 provides an upper bound over the right-hand side of (11), representing the energy of the error of the least squares estimation in Algorithm 1.

Lemma 4.1

Let us consider the set T estimated as in Algorithm 1, and let b represent the LS estimate of the signal samples evaluated on the support T:

The energy of the estimation error is upper bounded by a term proportional to the energy of the signal α0 over the support TC and by a term proportional to the measurement noise energy:

The proof of Lemma 4.1 is found in Appendix 1.

We recognize that the term in (13) represents the energy of the original signal outside the set T. Since has no energy over TC, we can write

In turn, the energy of the error is upper bounded by the energy of the signal to be recovered at iteration j over the set ω0C, that is with the energy

In order to upper bound this latter term, we resort to the following Lemma 4.2, whose proof can be found in Appendix 2.

Lemma 4.2

Let α be a K-sparse signal with support and α0 be the portion of α confined to the support , of cardinality . Under these settings, the estimate of the vector α0 obtained after the j-th iteration of the HCoSaMP algorithm, namely , is the same as that obtained by application of the CoSaMP, with the following positions:

where the term e0 encompasses not only acquisition noise but also approximation error on the support . It can then be proved that:

The result expressed by (17) shows that when the algorithm selects the support ω0 from the proxy at iteration j, it is able to identify most of the energy of the portion of the signal that still has to be recovered at iteration j.

Injecting (14), (15) and (17) in (13), we finally come up with the desired upper bound to the reconstruction error energy

By setting, as in [1, 2], , we can rewrite (18) as

which, solving the recursion, yields the expression in (9).
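The step from a per-iteration bound to a closed-form one can be made explicit. The following is a generic worked derivation, not a reproduction of (18), (19) and (9): E_j stands for the error norm after j iterations, ρ for the contraction factor, and cν for the term that does not decay with the iterations; these placeholder symbols are assumptions introduced here for illustration.

```latex
% Per-iteration bound with contraction factor 0 < \rho < 1:
E_{j+1} \le \rho\, E_j + c\,\nu .
% Unrolling the recursion j times:
E_j \le \rho^{j} E_0 + c\,\nu \sum_{i=0}^{j-1} \rho^{i}
    \le \rho^{j} E_0 + \frac{c\,\nu}{1-\rho} .
% With \rho = 1/2, the contraction factor of the CoSaMP analysis in [1]:
E_j \le 2^{-j} E_0 + 2\,c\,\nu .
```

The first term vanishes as j grows, so that in the noise-free case with no energy outside the recovered support (ν=0) the error decays to zero, while otherwise it settles on a floor proportional to ν.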

Having proved the convergence of Algorithm 1, we proceed to discuss the convergence of Algorithm 2, devoted to the reconstruction of the i-th decomposition layer.

Theorem 4.1 (Part II: Convergence of Algorithm 2)

Let us assume to have recovered an estimate of the original signal α(i−1). Then, it can be proved that the signal with can be recovered at the j-th iteration of the HCoSaMP algorithm with a mean squared error bounded by

provided that the sensing matrix exhibits a RIP constant .

Proof.

(Proof of Theorem 4.1, Part II) We restrict ourselves, for the sake of concreteness, to the case of a two-layer decomposition, i.e. α=α0+α1. The extension to the case of multiple decomposition layers is straightforward.

Let us then assume that the signal α0 has been correctly recovered by running Algorithm 1; upon convergence, the reconstructed signal can be written as

where the noise term n0 accounts for both convergence inaccuracies due to a finite number of iterations and the error floor due to the presence of the acquisition noise. Algorithm 2 aims at recovering the signal α1. The algorithm starts by initializing the output at the first iteration with the reconstructed version of α0, that is

Under this setting, the starting residual rewrites as follows:

where we have compactly denoted e1=n−Φ n0. The relation in (22) shows that the selected initialization makes Algorithm 2 equivalent to the execution of Algorithm 1 aimed at recovering α1 with a null initialization. Hence, following the steps in the proof of Part I,

we obtain

where the set T now assumes a cardinality .

Let us now rephrase the positions in (16) as follows:

In this case, s is confined to so that its sparsity is bounded by . Then, stemming from the NAP property we can write

Then, following the same derivations driving to (17), we come up with the following inequality

With this rephrasing, the overall convergence of Algorithm 2 is proved according to the derivations already exposed in Part I.
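The warm-start argument at the core of Part II can be spelled out in the two-layer case. The derivation below is a sketch under the model y = Φ(α_0+α_1)+n of (7); the hat notation for the estimate delivered by Algorithm 1 is an assumption introduced here.

```latex
% Estimate delivered by Algorithm 1, with residual inaccuracy n_0:
\hat{\alpha}_0 = \alpha_0 + n_0 .
% Starting residual of Algorithm 2 when initialized with \hat{\alpha}_0:
r = y - \Phi\hat{\alpha}_0
  = \Phi(\alpha_0 + \alpha_1) + n - \Phi(\alpha_0 + n_0)
  = \Phi\alpha_1 + (n - \Phi n_0)
  = \Phi\alpha_1 + e_1 .
```

The starting residual therefore has the form of a noisy acquisition of α_1 alone, with equivalent noise e_1 = n − Φn_0, which is why the second stage behaves as a run of the first stage on α_1 with null initialization.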

From the computational complexity point of view, with respect to the original CoSaMP and to its adaptation to the structured signal in [2], the HCoSaMP just exploits the layered structure of the image transform and requires L+1 applications of the basic stage; the latter in turn retains the computational complexity of the CoSaMP.

4.2 Related works

With respect to [2], the HCoSaMP poses milder assumptions since it does not invoke any constraint on the structure (e.g. tree-based, block-based) of the sparse signal. Still, as shown in the next section, the HCoSaMP achieves reconstruction accuracy performances comparable to that achieved by CS recovery with a larger number of measurements. In a nutshell, the reason is to be found in that the hierarchical approach allows each stage of the reconstruction algorithm to separately recover signal components on supports Ω l whose cardinality K l is smaller than that of the signal, i.e. K l <K; the increased ratio between the number of measurements M and the sparsity K l of the signal to be actually recovered definitely improves the convergence conditions. This capability is a merit of the HCoSaMP that leverages the nested signal structure that can be found in different domains depending on the application under concern.

The problem of the convergence of greedy algorithms on different subsets of the sparsity domain is debated in [14], where the authors focus on the CS recovery algorithm stability. The algorithm stability is said to be locally nested if the algorithm convergence on an outer set implies the convergence in all the inner subsets. In [14], it is shown that this property does not pertain to several greedy algorithms for CS recovery. Thereby, in the absence of assumptions on the nested structure of the signal, the algorithm convergence does not propagate from the more comprehensive set to the inner subsets. In our analysis, we instead prove the convergence on the entire domain by proving the convergence on a selection of hierarchically organized nested subsets, starting from the inner one up to the outer one.

A relevant question naturally arises about how to select the nested subsets. The first step is indeed the identification of a domain in which the signal is either sparse or compressible. Once the sparsity domain is identified, the second step consists in partitioning the coefficients into nested subsets; in the sparsity domain, the support nesting often arises naturally when the signal energy is much more concentrated in lower frequency subsets and decreases towards higher frequency subsets. This is the case of natural images, which typically are sparse and nested in the discrete wavelet transform (DWT) domain. A second example is found in video compressive sensing, where nested approximation may be invoked when the video data is suitably transformed in a 3D discrete cosine transform domain [15]. In the following, we show with the help of numerical examples that texture images also satisfy the NAP in the graph-based transform (GBT) [16] domain. Once a layered support structure allows the NAP to be invoked on the signal under concern, the size of each set Ω_l, l=0,…,L−1, shall be assigned according to a fundamental trade-off: smaller cardinality sets have looser CS measurement requirements but may result in slower convergence in case of high out-of-band energy. Hence, the choice of the subset cardinality depends on application-related issues, such as the acquisition noise level or the cost of the measurement acquisition stage.

Moreover, the acquisition phase itself could in principle be designed so as to reduce the approximation error on the inner nested subsets, by organizing the sensing matrix so as to relate subsets of the measurements to subsets of the signal sparsity domain, much in the same way as block diagonal matrices [17, 18]; further analysis is needed to investigate the RIP of a CS matrix inducing a nested structure on the acquired CS measurements.

Finally, the analysis presented herein draws a path for the hierarchical solution of different recovery algorithms, such as total variation minimization, which has been proved effective in video CS applications [15]. Recent literature results [19] have shown that the total variation (TV) minimization algorithm is guaranteed to converge also in the presence of acquisition noise. This paves the way to hierarchical application of the TV algorithm to progressively extended nested sets, on which the approximation error plays just the same role as the acquisition noise. The extension of the hierarchical approach to TV minimization is left for further study.

5 Numerical simulation

We now present numerical results assessing the performance of HCoSaMP in reconstructing signals characterized by the NAP; we investigate both the case of signals compressible in the DWT domain and the interesting case of signals compressible in the so-called graph-based transform domain, among which texture images stand as an example of paramount relevance.

5.1 Compressible signals in the DWT domain

Here, we show how the HCoSaMP can be employed to obtain a high reconstruction quality from a reduced number of compressive measurements of signals compressible in the DWT domain. Among such signals, both natural images and spatially localized signals stand as interesting cases. The class of spatially localized images suitably represents the physical fields measured by wireless sensor networks devoted to environmental monitoring, such as temperature measurements for anomalous event detection or underwater current field estimation [6, 7]. In all of these cases, the sensed field exhibits a peculiar structure given by one or more peaks at levels considerably larger than the field mean value: an example of a spatially localized signal is the one provided in Figure 1A, representing the ‘Zonal Current’ data, sensed at Monterey Bay on 10 October 2012 (data available in [20]).

We have tested the HCoSaMP on the details shown in Figure 1A,B representing respectively a cropped 64×64 pixel fragment of the Zonal Current field and of the test image Peppers, i.e. with N=4,096 samples. We form the compressive sensing measurements via a full Gaussian matrix Φ with M=900 or M=1,500 measurements, depending on the experiment at hand.

We have run the HCoSaMP algorithm considering the hierarchical recovery over five supports corresponding to the DWT decomposition levels. We remark that this is not the only possible choice for the nested supports, other options being possible provided that the signal to be recovered satisfies the NAP within the chosen progression of nested sets.
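One way in which five nested supports may arise for a 64×64 image is sketched below. The sketch assumes a four-level 2D DWT whose coefficients are stored in the usual layout with the scaling coefficients in the top-left corner, and a row-major flattening of the coefficient array; both the number of levels and the layout are assumptions, since the text does not specify them, and the function name is hypothetical.

```python
import numpy as np

def nested_dwt_supports(side, levels):
    """Nested index sets for a side x side coefficient array.

    Set l holds the row-major indices of the top-left square block of side
    side / 2**(levels - l), for l = 0, ..., levels.
    """
    idx = np.arange(side * side).reshape(side, side)
    supports = []
    for l in range(levels + 1):
        block = side >> (levels - l)      # 4, 8, 16, 32, 64 for side=64, levels=4
        supports.append(idx[:block, :block].ravel())
    return supports

supports = nested_dwt_supports(side=64, levels=4)
sizes = [s.size for s in supports]
print(sizes)  # [16, 64, 256, 1024, 4096]
```

Each set contains the previous one, so the progression goes from the scaling coefficients alone up to the full set of N=4,096 coefficients.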

We start by presenting results concerning the spatially localized image in Figure 1A; in this experiment, we have acquired M=900 measurements and we have assumed K=256. In Figure 2, we show the mean squared error (MSE) on the reconstructed image obtained by the HCoSaMP at the different iterations. The hierarchical approach of the HCoSaMP is clearly recognizable in the plot of Figure 2, where the stepwise pattern of the MSE is due to the partial recovery of the DWT coefficients over the progressively extended supports. The convergence on the different layers can be clearly identified, as well as the floor achieved on each layer. To provide a comparison, we have also plotted the MSE obtained by the classical CoSaMP, detailed in [1]. The hierarchical structure of the HCoSaMP exploits the limited number of measurements by separately reconstructing the different layers, and it attains a lower error floor than the classical reconstruction.

To visually confirm these results, we show in Figure 3 the reconstructed image obtained at convergence. For the sake of comparison, we also report the reconstruction results obtained by the model-based compressive sensing, described in [2], and by the work in [8]. Both of these works exploit the intrinsic structure of the DWT coefficients to devise a reconstruction procedure which is able to obtain high reconstruction accuracy from a reduced number of CS measurements.

The HCoSaMP is therefore well suited to spatially localized images encountered in resource-constrained CS applications, such as field monitoring in sensor networks. For the sake of completeness, we also consider the case of natural image acquisition. We have considered the CS acquisition of the fragment in Figure 1B extracted from the test image ‘Peppers’ with M=1,500 measurements and K=1,024. We show in Figure 4 the reconstruction MSE obtained by HCoSaMP and CoSaMP, and in Figure 5 the reconstructed images obtained at convergence by the HCoSaMP, the model-based CS [2], the work in [8], and the classical CoSaMP. As for the case of spatially localized fields, inspection of Figures 4 and 5 confirms that the HCoSaMP performs at least as well as the selected state-of-the-art approaches when compared on a fair basis. In particular, for the sake of fairness, it must be noticed that the HCoSaMP competitor [8] relies on specific priors on the coefficients of a natural image in the DWT domain. In order to employ such a priori knowledge within stochastic annealing procedures, it requires a high computational complexity; besides, it has the drawback of performing poorly when the starting assumptions fail to hold, as we show in the following Section 5.2.

The HCoSaMP presented herein has the merit of relaxing any a priori assumption but the NAP in the sparsity domain. In the following, we investigate the case of a texture image, where typical assumptions found in dealing with natural images do not hold, and we show that the HCoSaMP outperforms selected state-of-the-art works, thanks to its looser assumptions, which better cope with application cases where approaches designed for natural images perform poorly.

5.2 Compressible signals in the GBT domain

The graph-based transform (GBT) has been recently introduced in the framework of video coding [16] to provide a novel representation domain which is able to efficiently capture image discontinuities. The GBT relies on an image-dependent basis suitably built to accommodate image boundaries and abrupt luminance discontinuities; because of this peculiar structure, it has been employed in the framework of depth map coding [21]. The GBT can also be applied as a sparsifying representation for texture images [9], which are hardly compressible in classical transformed domains such as the DWT or the discrete cosine transform (DCT) domains. Here, we show by numerical examples that texture images satisfy the NAP in the GBT domain and are therefore amenable to reconstruction by the HCoSaMP.

The GBT domain is identified by an image-dependent orthonormal basis built up on the image edge map, and in principle, it requires the knowledge of the image boundaries for its evaluation.

This notwithstanding, the GBT, being strongly related to the image structure, can be applied in all those applications where a class of images sharing the same structure can be identified. In these cases, in fact, the GBT basis may be built on a selected image, representative of the whole class, and can then be applied also to the other images in the class. This is indeed the case for texture images, where classes of images sharing the same structure can be easily found, and the GBT may be effectively employed to devise a sparsifying basis for CS acquisition.

Before turning to the presentation of numerical simulation results in this reference scenario, we give a brief sketch on the GBT basis construction. The interested reader can refer to [16] and [21] for more details. Given an N pixel image x, the GBT orthonormal basis is given by the eigenvectors r i ,i=1,…,N, diagonalizing the matrix A built as follows:

where L is a binary N×N adjacency matrix whose element l_{u,v} is set to 1 if the pixels u and v are not separated by an image edge, and to 0 otherwise. In Figure 6, we show an example of a 64×64 fragment extracted from the D104 Brodatz texture (available in [22]), along with its GBT representation and a selected vector from the GBT basis. Inspection of Figure 6 shows how the GBT domain is able to compactly represent the texture image; besides, the eigenvector in Figure 6C clearly confirms the strong link between the GBT basis elements and the image structure.
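The basis construction sketched above can be illustrated on a toy image. The code below is a minimal sketch under stated assumptions, not the paper's definition of A: it takes the matrix to be diagonalized as the graph Laplacian (degree matrix minus adjacency matrix), as customary in the GBT literature [16, 21]; it connects only 4-neighbouring pixels; and it treats two neighbours as separated by an edge when they carry different labels in a given segmentation map. The function name `gbt_basis` and the label-map input are hypothetical.

```python
import numpy as np

def gbt_basis(labels):
    """Eigen-decomposition of the graph Laplacian of a 4-connected pixel graph.

    labels: 2-D integer array; neighbouring pixels with different labels are
    treated as separated by an image edge (assumption for illustration).
    """
    h, w = labels.shape
    n = h * w
    idx = np.arange(n).reshape(h, w)         # row-major pixel indices
    adj = np.zeros((n, n))
    # Horizontal neighbours not separated by an edge.
    same = labels[:, :-1] == labels[:, 1:]
    u, v = idx[:, :-1][same], idx[:, 1:][same]
    adj[u, v] = adj[v, u] = 1.0
    # Vertical neighbours not separated by an edge.
    same = labels[:-1, :] == labels[1:, :]
    u, v = idx[:-1, :][same], idx[1:, :][same]
    adj[u, v] = adj[v, u] = 1.0
    laplacian = np.diag(adj.sum(axis=1)) - adj
    eigvals, eigvecs = np.linalg.eigh(laplacian)  # orthonormal eigenvectors
    return eigvals, eigvecs

# Toy 8x8 image made of two flat regions separated by a vertical edge.
labels = np.zeros((8, 8), dtype=int)
labels[:, 4:] = 1
eigvals, R = gbt_basis(labels)

x = np.where(labels == 0, 10.0, 50.0).ravel()     # piecewise-constant image
coeffs = R.T @ x                                  # transform coefficients
n_significant = int(np.sum(np.abs(coeffs) > 1e-8))
print(n_significant)
```

Since the toy image is constant on each connected region, it lies in the null space of the Laplacian, and at most two coefficients (one per region) are significant; this is the mechanism by which an edge-adapted basis represents such content compactly.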

To show that the GBT yields a more compressible representation of texture images than the DWT, we plot in Figure 7 the GBT and DWT coefficient vectors (the latter obtained by the Daubechies wavelet transform) of the texture D104 in Figure 6A. The higher compressibility attained in the GBT domain is readily recognized.

Besides providing a compressible representation for texture images, the GBT domain is also characterized by the NAP. To assess this property, Figure 8A shows a 64×64 pixel fragment cropped from the D49 Brodatz texture, while Figures 8B and 8C show the versions reconstructed by retaining only the first 1/8 and the first 1/16 of the GBT coefficients, respectively.
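The truncation experiment described above can be mimicked on a synthetic signal. The sketch below is only an illustration under stated assumptions: the orthonormal basis is a stand-in (eigenvectors of a path-graph Laplacian, ordered by increasing eigenvalue) rather than an image-dependent GBT basis, the test signal is synthetic, and the helper name `truncate_first` is hypothetical.

```python
import numpy as np

def truncate_first(coeffs, fraction):
    """Zero all coefficients except the leading `fraction` of them."""
    kept = np.zeros_like(coeffs)
    k = int(round(fraction * coeffs.size))
    kept[:k] = coeffs[:k]
    return kept

# Stand-in orthonormal basis: eigenvectors of a path-graph Laplacian
# (an assumption for illustration, not the image-dependent GBT basis).
n = 64
lap = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lap[0, 0] = lap[-1, -1] = 1.0
_, basis = np.linalg.eigh(lap)

t = np.linspace(0.0, 1.0, n)
signal = np.exp(-((t - 0.4) ** 2) / 0.02)   # smooth synthetic test signal
coeffs = basis.T @ signal

# Mean squared error of the approximations that keep the first 1/8 and 1/16.
mse = {}
for fraction in (1 / 8, 1 / 16):
    approx = basis @ truncate_first(coeffs, fraction)
    mse[fraction] = np.mean((signal - approx) ** 2)
```

Because the basis is orthonormal and the two retained index sets are nested, the error with 1/8 of the coefficients can never exceed the error with 1/16; how small both errors are depends on how well the signal obeys the NAP in the chosen basis.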

As for natural images and spatially localized signals in the DWT domain, texture images satisfy the NAP in the GBT domain so that the HCoSaMP algorithm can be effectively employed for CS texture acquisition.

To run the HCoSaMP, we have considered the nested supports formed by the first 1/256, 1/64, 1/16, 1/4 and 1/2 of the GBT coefficients.
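For concreteness, the nested supports listed above can be enumerated as index sets. This is a minimal sketch assuming that N=4,096 (the 64×64 fragment of Figure 8A) and that "first" refers to the leading positions of the GBT coefficient vector; the function name `nested_supports` is hypothetical.

```python
import numpy as np

def nested_supports(n, fractions):
    """Leading index sets {0, ..., k-1}, ordered from smallest to largest."""
    sizes = sorted(int(n * f) for f in fractions)
    return [np.arange(k) for k in sizes]

n = 64 * 64  # number of coefficients of a 64x64 image (assumed here)
fractions = (1 / 256, 1 / 64, 1 / 16, 1 / 4, 1 / 2)
supports = nested_supports(n, fractions)
sizes = [s.size for s in supports]  # 16, 64, 256, 1024, 2048
```

Each support contains the previous one, which is the property a hierarchical extension of the reconstruction support relies on.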

To test the performance of the HCoSaMP, we have compressively sampled the D49 Brodatz texture in Figure 8A with M=2,000 and K=1,024. In Figure 9, we plot the MSE attained by the HCoSaMP and by the classical CoSaMP. The results confirm the effectiveness of the HCoSaMP in hierarchically recovering the GBT coefficients. Qualitative results are given in Figure 10, which shows the HCoSaMP reconstructions at different iteration steps. In Figure 11, we also compare the reconstructed images obtained by the different tested algorithms. Specifically, as in the case of natural and spatially localized images, we have compared the HCoSaMP with the model-based CS [2], with the approach in [8] and with the classical CoSaMP algorithm. Remarkably, since the structure of the GBT coefficients differs from that of the DWT coefficients, state-of-the-art methods relying on the DWT structure fail to attain satisfactory reconstruction quality. Still, a remark is in order. The work in [8] assumes that the representation exhibits a tree structure such as the one characterizing the DWT coefficients; whenever this assumption fails to hold, as in the case of the GBT representation, the recovery performs poorly. The work in [2] is more general and can accommodate different structures in the transformed domain. Here, we have employed the MATLAB code provided by the authors in [23], designed for tree-structured signals; better results may be attained by adapting the model-based CS to the specific structure of the coefficients in the GBT domain.

We observe that the recovery of texture images from compressive sampling measurements is an open research challenge and could benefit from more complex texture generation models, such as those envisaged for texture classification purposes [24, 25]; the results presented herein pave the way for further studies on this issue.

6 Conclusion

In this paper, we have presented a reconstruction procedure, which we called HCoSaMP, for the recovery of compressively sampled signals characterized by the so-called nested approximation property. This property is found in a wide range of applications where stronger hypotheses (e.g. tree-structured coefficients) fail to hold. The convergence of the HCoSaMP procedure is analytically demonstrated. Besides, the HCoSaMP procedure is validated by numerical simulation results and by performance comparison with state-of-the-art reconstruction procedures. The adoption of the HCoSaMP is beneficial in resource-constrained applications where the cost of the measurements is high [6] and the number of collected measurements does not suffice for the CoSaMP procedure to converge. Finally, the analysis presented herein points towards hierarchical versions of other recovery algorithms for which robust convergence is guaranteed.

Endnotes

a The energy of the error vector e0 is bounded by ; as will be clarified in the following, such error, in the HCoSaMP convergence, plays much the same role played by the approximation error in the CoSaMP convergence on non-sparse, compressible signals.

b To implement the reconstruction algorithms described in [2] and in [8], we have employed the MATLAB codes provided by the authors, available in [23] and [26], respectively.

Appendix 1

Proof.

(Proof of Lemma 4.1) Let us consider the set , as in Algorithm 1, and let us define

Within these settings, b represents the LS estimate of the signal samples evaluated on the support T. We prove that the energy of the estimation error is upper bounded by a term proportional to the energy of the signal α0 over the support TC and by a term proportional to the measurement noise energy:

Let us start by noting that the set T is defined as the union of two proper subsets of , so that its cardinality is upper bounded by . Keeping this in mind, let us consider the Euclidean distance between b and α0. We can write

Simple algebra leads to

Finally, noting that , we come up with the following upper bound (cf. also Lemmas 1 to 3 in [1]):

Under the assumption that , the expression in (13) is evaluated as follows

This concludes the proof.
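The LS estimate b used in the proof above can be illustrated numerically. The following sketch assumes, as in the CoSaMP of [1], that b is obtained by least squares over the columns of the sensing matrix indexed by the support T and is zero elsewhere. The names `phi`, `y`, `support` and `ls_on_support`, the problem sizes and the Gaussian sensing matrix are all hypothetical choices made for illustration.

```python
import numpy as np

def ls_on_support(phi, y, support):
    """Least-squares estimate restricted to the columns of phi in `support`."""
    b = np.zeros(phi.shape[1])
    sol, *_ = np.linalg.lstsq(phi[:, support], y, rcond=None)
    b[support] = sol          # entries outside the support stay at zero
    return b

rng = np.random.default_rng(0)
m, n = 40, 100
phi = rng.standard_normal((m, n)) / np.sqrt(m)   # Gaussian sensing matrix
support = np.array([3, 17, 42, 58, 90])
alpha = np.zeros(n)
alpha[support] = rng.standard_normal(support.size)
y = phi @ alpha                                   # noiseless measurements

b = ls_on_support(phi, y, support)
```

In this noiseless example the signal is entirely confined to the chosen support, so both terms of the bound vanish and b coincides with the true coefficients up to numerical precision. With noise, or with signal energy outside T, the estimation error grows in line with the two terms discussed in the proof.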

Appendix 2

Proof.

(Proof of Lemma 4.2) Let us recall the starting positions as found in (16):

Let us first note that α0 is confined to by definition and that is confined to by construction. Then, the signal s exhibits at most nonzero coefficients. Then, let us define Σ=supp(s). As s is at most sparse, then we have . Now, because of the NAP (cf. [2]), the set ω0 is the one collecting the indices providing the support of the best approximation of u within the set , so that, following the guidelines in [1], we can write the following derivations:

Resorting to several inequalities provided in [1] (namely Lemmas 1 to 3 in [1]), omitted here for the sake of compactness, we derive the following bounds on the terms in (34):

By injecting (35) and (36) in (34), we come up withc

where we have set due to the fact that Σ is defined as the support of s. As, because of (32), , we obtain the expression in

References

Needell D, Tropp JA: CoSaMP: Iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmonic Anal 2008, 26: 301-321.

Baraniuk RG, Cevher V, Duarte MF, Hegde C: Model-based compressive sensing. IEEE Trans. Inform Theory 2010, 56(4):1982-2001.

Colonnese S, Cusani R, Rinauro S, Scarano G: Bayesian prior for reconstruction of compressively sampled astronomical images. In 4th European Workshop on Visual Information Processing. EUVIP 2013, Paris; June 2013.

Baraniuk RG, Hegde C: Sampling and recovery of pulse streams. IEEE Trans. Signal Process 2011, 59(4):1505-1517.

Eldar Y, Mishali M: Robust recovery of signals from a union of subspaces. IEEE Trans. Inf. Theory 2009, 55: 5302-5316.

Colonnese S, Cusani R, Rinauro S, Ruggiero G, Scarano G: Efficient compressive sampling of spatially sparse fields in wireless sensor networks. EURASIP J. Adv. Signal Process 2013, 136: 1-19.

Fazel F, Fazel M, Stojanovic M: Random access compressed sensing for energy-efficient underwater sensor networks. IEEE J. Selected Areas Commun 2011, 29(8):1660-1670.

He L, Carin L: Exploiting structure in wavelet-based Bayesian compressive sensing. IEEE Trans. Signal Process 2009, 57(9):3488-3497.

Colonnese S, Rinauro S, Mangone K, Biagi M, Cusani R, Scarano G: Reconstruction of compressively sampled texture images in the graph-based transform domain. In IEEE International Conference on Image Processing (ICIP). Paris; 27–30 October 2014.

Candes EJ, Tao T: Decoding by linear programming. IEEE Trans. Inform. Theory 2005, 51: 4203-4215. 10.1109/TIT.2005.858979

Rudelson M, Vershynin R: On sparse reconstruction from Fourier and Gaussian measurements. Comm. Pure Appl. Math 2008, 61: 1025-1045. 10.1002/cpa.20227

Vershynin R: On the role of sparsity in Compressed Sensing and Random matrix theory, CAMSAP’09. In 3rd International Workshop on Computational Advances in Multi-Sensor Adaptive Processing. Aruba, Dutch Antilles; 13–16 December 2009.

Bah B, Tanner J: Improved bounds on restricted isometry constants for Gaussian matrices. SIAM J. Matrix Anal. Appl 2010, 31(5):2882-2898. 10.1137/100788884

Mailhe B, Sturm B, Plumbley MD: Behavior of greedy sparse representation algorithms on nested supports. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Vancouver, Canada; 26–31 May 2013.

Liu Y, Pados DA: Decoding of framewise compressed-sensed video via inter-frame total variation minimization. SPIE J. Electron. Imaging Special Issue Compressive Sensing Imaging 2013, 22: 1-8.

Lee S, Ortega A: Adaptive compressive sensing for depthmap compression using graph-based transform. In Proceedings of International Conference on Image Processing (ICIP) 2012. Orlando, FL, USA; 30 September to 3 October 2012.

Yap HL, Eftekhari A, Wakin MB, Rozell CJ: The restricted isometry property for block diagonal matrices. In 45th Annual Conference on Information Sciences and Systems (CISS). IEEE, Baltimore; 23 Mar to 25 Mar 2011.

Eftekhari A, Yap HL, Rozell CJ, Wakin MB: The restricted isometry property for random block diagonal matrices. 2012.

Needell D, Ward R: Stable image reconstruction using total variation minimization. SIAM J. Imaging Sci 2013, 6(2):1035-1058.

Jet Propulsion Laboratory. Accessed 26 May 2014 http://ourocean.jpl.nasa.gov

Cheung G, Kim W-S, Ortega A, Ishida J, Kubota A: Depth map coding using graph based transform and transform domain sparsification. In Proceedings of International Workshop on Multimedia Signal Processing (MMSP). Hangzhou, China; 17–19 October 2011.

Randen T: Brodatz textures. Accessed 26 May 2014 http://www.ux.uis.no/~tranden/brodatz.html

Baraniuk RG, Cevher V, Duarte MF, Hegde C: Model-based Compressive Sensing Toolbox v1.1. Accessed 26 May 2014 http://dsp.rice.edu/software/model-based-compressive-sensing-toolbox

Campisi P, Neri A, Scarano G: Reduced complexity modeling and reproduction of colored textures. IEEE Trans. Image Process 2000, 9(3):510-518. 10.1109/83.826788

Campisi P, Colonnese S, Panci G, Scarano G: Reduced complexity rotation invariant texture classification using a blind deconvolution approach. IEEE Trans. Pattern Anal. Mach. Intell 2006, 28(1):145-149.

Carin L, Ji S, Xue Y: Bayesian Compressive Sensing code. Accessed 25 May 2014 http://people.ee.duke.edu/~lcarin/BCS.html

Additional information

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Colonnese, S., Rinauro, S., Mangone, K. et al. Hierarchical CoSaMP for compressively sampled sparse signals with nested structure. EURASIP J. Adv. Signal Process. 2014, 80 (2014). https://doi.org/10.1186/1687-6180-2014-80

DOI: https://doi.org/10.1186/1687-6180-2014-80